How to Migrate from Convex to AWS

Move from Convex to your own AWS account for infrastructure ownership

Convex is a reactive backend platform that emphasizes TypeScript integration and real-time data. Your queries automatically re-run when data changes, and the type safety is genuinely good.

The trade-offs become clear at scale. Convex only runs in US regions with no multi-region option, and its proprietary document database means no SQL, no JOINs, and queries written in Convex-specific syntax that doesn't transfer anywhere else. If you outgrow it or need to integrate with existing infrastructure, you're looking at a full rewrite.

With AWS, RDS runs PostgreSQL in any region you need, and your data is portable so you can export it, query it with psql, or connect any tool that speaks Postgres. Your code uses standard patterns, so if you move providers again someday, it's a migration, not a rebuild.

The traditional path to AWS means learning Terraform or CloudFormation and building your own backend from scratch. That's a big jump when you're used to Convex's developer experience.

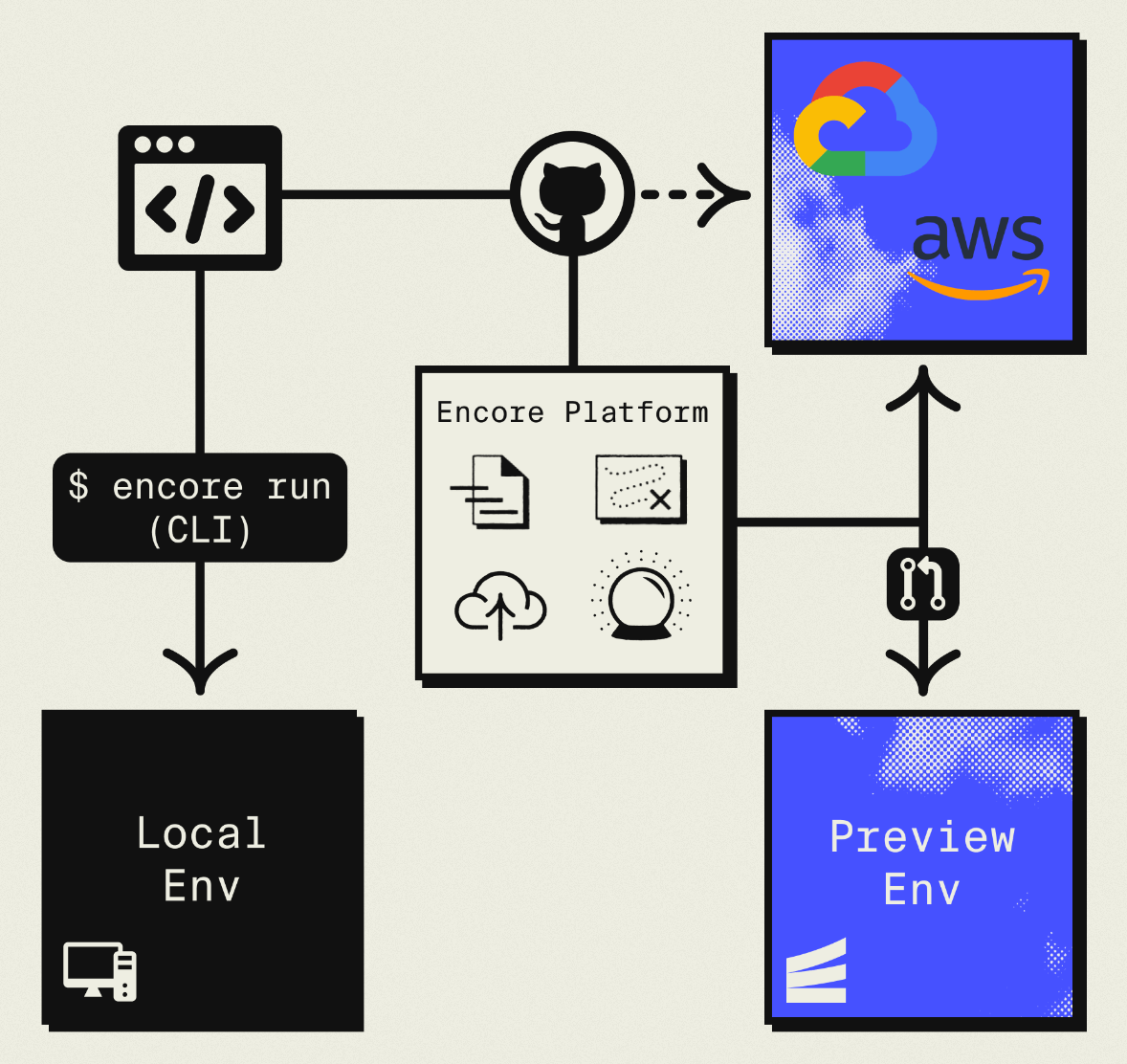

This guide takes a different approach: migrating to your own AWS account using Encore and Encore Cloud. Encore is an open-source TypeScript backend framework (11k+ GitHub stars) where you define infrastructure as type-safe objects in your code: databases, Pub/Sub, cron jobs, object storage. Encore Cloud then provisions these resources in your AWS account using managed services like RDS, SQS, and S3.

The result is AWS infrastructure you own and control, with TypeScript ergonomics similar to what you're used to. You'll trade Convex's automatic reactivity for infrastructure ownership and SQL flexibility. Companies like Groupon already use this approach to power their backends at scale.

What You're Migrating

| Convex Component | AWS Equivalent (via Encore) |
|---|---|
| Convex Database | Amazon RDS PostgreSQL |
| Convex Functions (compute) | Fargate |
| Convex Queries | Encore API endpoints |
| Convex Mutations | Encore API endpoints |
| File Storage | Amazon S3 |
| Scheduled Functions | CloudWatch Events |

The biggest shift is from Convex's reactive queries to a traditional request-response model. The Reactivity Tradeoff section below covers what that means in practice.

Why Teams Migrate from Convex

Infrastructure ownership: Convex runs on their infrastructure. You can't access it, configure it, or integrate it with other systems in your VPC. With AWS, you own everything and can connect to existing resources.

SQL instead of documents: Convex uses a document model. PostgreSQL gives you relational modeling with foreign keys, joins, and the full SQL ecosystem. Complex queries that require multiple Convex reads become single SQL statements.

Cost predictability: Convex pricing scales with usage in ways that can be hard to predict. On AWS you can commit to reserved capacity (for example, RDS Reserved Instances) in exchange for a fixed, predictable rate.

No platform dependency: Your code runs on standard AWS services rather than Convex's proprietary runtime.

What Encore Handles For You

When you deploy to AWS through Encore Cloud, every resource gets production defaults: private VPC placement, least-privilege IAM roles, encryption at rest, automated backups where applicable, and CloudWatch logging. You don't configure this per resource. It's automatic.

Encore follows AWS best practices and gives you guardrails. You can review infrastructure changes before they're applied, and everything runs in your own AWS account so you maintain full control.

Here's what that looks like in practice:

import { SQLDatabase } from "encore.dev/storage/sqldb";

import { Bucket } from "encore.dev/storage/objects";

import { Topic } from "encore.dev/pubsub";

import { CronJob } from "encore.dev/cron";

const db = new SQLDatabase("main", { migrations: "./migrations" });

const uploads = new Bucket("uploads", { versioned: false });

const events = new Topic<OrderEvent>("events", { deliveryGuarantee: "at-least-once" });

const _ = new CronJob("daily-cleanup", { schedule: "0 0 * * *", endpoint: cleanup });

This provisions RDS, S3, SNS/SQS, and CloudWatch Events with proper networking, IAM, and monitoring. You write TypeScript or Go, Encore handles the Terraform. The only Encore-specific parts are the import statements. Your business logic is standard TypeScript, so you're not locked in.

See the infrastructure primitives docs for the full list of supported resources.

The Reactivity Tradeoff

Convex's biggest feature is automatic reactivity. When data changes, queries re-run and your UI updates. You don't write any subscription code to get this:

// Convex queries are reactive by default

const tasks = useQuery(api.tasks.list);

// UI automatically updates when any task changes

With Encore (and most backends), you get data on request. For real-time updates, you need WebSockets, polling, or push notifications. This is more work, but it's also more explicit and controllable.

If your app heavily relies on real-time updates, plan for this change. If you mainly use Convex as a database with occasional real-time features, the migration is more straightforward.

Step 1: Migrate Your Database

Export Your Data

Use Convex's export functionality:

npx convex export --path ./convex-backup

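This gives you a snapshot of your document data as JSON. Depending on your CLI version, the export may be a ZIP archive with one JSON Lines file per table rather than a single JSON file, so check the layout before running the migration script.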

Design Your PostgreSQL Schema

Each Convex table maps to a relational table, and v.id() references become foreign keys.

Convex schema:

// convex/schema.ts

import { defineSchema, defineTable } from "convex/server";

import { v } from "convex/values";

export default defineSchema({

users: defineTable({

email: v.string(),

name: v.string(),

}).index("by_email", ["email"]),

tasks: defineTable({

text: v.string(),

isCompleted: v.boolean(),

userId: v.id("users"),

}).index("by_user", ["userId"]),

});

PostgreSQL schema:

-- migrations/001_initial.up.sql

CREATE TABLE users (

id TEXT PRIMARY KEY DEFAULT gen_random_uuid()::text,

email TEXT UNIQUE NOT NULL,

name TEXT NOT NULL,

created_at TIMESTAMPTZ DEFAULT NOW()

);

CREATE TABLE tasks (

id TEXT PRIMARY KEY DEFAULT gen_random_uuid()::text,

text TEXT NOT NULL,

is_completed BOOLEAN DEFAULT false,

user_id TEXT NOT NULL REFERENCES users(id) ON DELETE CASCADE,

created_at TIMESTAMPTZ DEFAULT NOW()

);

CREATE INDEX tasks_user_idx ON tasks(user_id);

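The by_user index on userId in Convex becomes a foreign key plus an explicit index in PostgreSQL (PostgreSQL does not index foreign key columns automatically), and the by_email index becomes a UNIQUE constraint. The relationship and the email uniqueness are now enforced at the database level.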

Set Up the Encore Database

import { SQLDatabase } from "encore.dev/storage/sqldb";

const db = new SQLDatabase("main", {

migrations: "./migrations",

});

That's the complete database definition. Encore analyzes this at compile time and provisions RDS PostgreSQL when you deploy.

Write a Migration Script

Transform your exported JSON into SQL inserts:

// scripts/migrate-data.ts

import * as fs from "fs";

import { Pool } from "pg";

const pg = new Pool({ connectionString: process.env.DATABASE_URL });

const data = JSON.parse(fs.readFileSync("./convex-backup/data.json", "utf8"));

async function migrate() {

// Migrate users first (tasks reference them)

for (const user of data.users) {

await pg.query(

`INSERT INTO users (id, email, name, created_at) VALUES ($1, $2, $3, $4)`,

[user._id, user.email, user.name, new Date(user._creationTime)]

);

}

// Then migrate tasks

for (const task of data.tasks) {

await pg.query(

`INSERT INTO tasks (id, text, is_completed, user_id, created_at) VALUES ($1, $2, $3, $4, $5)`,

[task._id, task.text, task.isCompleted, task.userId, new Date(task._creationTime)]

);

}

console.log("Migration complete");

}

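// Report failures with a non-zero exit code and always close the pool

migrate()

.catch((err) => { console.error(err); process.exitCode = 1; })

.finally(() => pg.end());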
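Before running the inserts, it can help to check the export against the constraints the new schema adds. Convex's by_email index does not enforce uniqueness, and a userId can point at a user that has since been deleted, so either case would make an INSERT fail partway through. The sketch below assumes the document shapes from the schema above; `findDuplicateEmails` and `splitOrphanedTasks` are hypothetical helper names, not part of Convex or Encore.

```typescript
// Shapes of the exported documents, assumed to match convex/schema.ts above.
interface ConvexUser {
  _id: string;
  _creationTime: number; // milliseconds since the Unix epoch
  email: string;
  name: string;
}

interface ConvexTask {
  _id: string;
  _creationTime: number;
  text: string;
  isCompleted: boolean;
  userId: string;
}

// Returns every email that appears more than once (would violate UNIQUE).
function findDuplicateEmails(users: ConvexUser[]): string[] {
  const counts = new Map<string, number>();
  for (const user of users) {
    counts.set(user.email, (counts.get(user.email) ?? 0) + 1);
  }
  const duplicates: string[] = [];
  counts.forEach((n, email) => {
    if (n > 1) duplicates.push(email);
  });
  return duplicates;
}

// Separates tasks whose userId exists in the export from tasks that
// reference a missing user (would violate the foreign key).
function splitOrphanedTasks(
  users: ConvexUser[],
  tasks: ConvexTask[],
): { valid: ConvexTask[]; orphaned: ConvexTask[] } {
  const userIds = new Set(users.map((u) => u._id));
  const valid: ConvexTask[] = [];
  const orphaned: ConvexTask[] = [];
  for (const task of tasks) {
    if (userIds.has(task.userId)) {
      valid.push(task);
    } else {
      orphaned.push(task);
    }
  }
  return { valid, orphaned };
}
```

Running both checks on `data.users` and `data.tasks` first lets you decide how to handle the exceptions (merge, skip, or fix by hand) instead of finding out from a failed insert.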

Step 2: Convert Convex Queries to Encore APIs

Convex queries become Encore API endpoints. The structure changes from reactive subscriptions to request-response.

Before (Convex query):

// convex/tasks.ts

import { query } from "./_generated/server";

import { v } from "convex/values";

export const list = query({

args: { userId: v.id("users") },

handler: async (ctx, args) => {

return await ctx.db

.query("tasks")

.withIndex("by_user", (q) => q.eq("userId", args.userId))

.collect();

},

});

After (Encore API):

import { api } from "encore.dev/api";

import { getAuthData } from "~encore/auth";

interface Task {

id: string;

text: string;

isCompleted: boolean;

createdAt: Date;

}

export const listTasks = api(

{ method: "GET", path: "/tasks", expose: true, auth: true },

async (): Promise<{ tasks: Task[] }> => {

const auth = getAuthData()!;

const rows = await db.query<Task>`

SELECT id, text, is_completed as "isCompleted", created_at as "createdAt"

FROM tasks

WHERE user_id = ${auth.userID}

ORDER BY created_at DESC

`;

const tasks: Task[] = [];

for await (const task of rows) {

tasks.push(task);

}

return { tasks };

}

);

With SQL, you can write queries that would require multiple Convex operations:

// Get tasks with user info in one query

export const getTasksWithUsers = api(

{ method: "GET", path: "/tasks/with-users", expose: true, auth: true },

async (): Promise<{ tasks: TaskWithUser[] }> => {

const rows = await db.query<TaskWithUser>`

SELECT t.id, t.text, t.is_completed as "isCompleted",

u.name as "userName", u.email as "userEmail"

FROM tasks t

JOIN users u ON t.user_id = u.id

ORDER BY t.created_at DESC

LIMIT 50

`;

const tasks: TaskWithUser[] = [];

for await (const task of rows) {

tasks.push(task);

}

return { tasks };

}

);

Step 3: Convert Convex Mutations to Encore APIs

Mutations become POST/PATCH/DELETE endpoints.

Before (Convex mutation):

// convex/tasks.ts

import { mutation } from "./_generated/server";

import { v } from "convex/values";

export const create = mutation({

args: { text: v.string() },

handler: async (ctx, args) => {

const identity = await ctx.auth.getUserIdentity();

if (!identity) throw new Error("Not authenticated");

return await ctx.db.insert("tasks", {

text: args.text,

isCompleted: false,

userId: identity.subject,

});

},

});

export const complete = mutation({

args: { id: v.id("tasks") },

handler: async (ctx, args) => {

await ctx.db.patch(args.id, { isCompleted: true });

},

});

After (Encore API):

export const createTask = api(

{ method: "POST", path: "/tasks", expose: true, auth: true },

async (req: { text: string }): Promise<Task> => {

const auth = getAuthData()!;

const task = await db.queryRow<Task>`

INSERT INTO tasks (text, is_completed, user_id)

VALUES (${req.text}, false, ${auth.userID})

RETURNING id, text, is_completed as "isCompleted", created_at as "createdAt"

`;

return task!;

}

);

export const completeTask = api(

{ method: "PATCH", path: "/tasks/:id/complete", expose: true, auth: true },

async ({ id }: { id: string }): Promise<Task> => {

const auth = getAuthData()!;

// Ensure user owns the task

const task = await db.queryRow<Task>`

UPDATE tasks

SET is_completed = true

WHERE id = ${id} AND user_id = ${auth.userID}

RETURNING id, text, is_completed as "isCompleted", created_at as "createdAt"

`;

if (!task) {

throw new Error("Task not found or access denied");

}

return task;

}

);

export const deleteTask = api(

{ method: "DELETE", path: "/tasks/:id", expose: true, auth: true },

async ({ id }: { id: string }): Promise<{ deleted: boolean }> => {

const auth = getAuthData()!;

await db.exec`

DELETE FROM tasks WHERE id = ${id} AND user_id = ${auth.userID}

`;

return { deleted: true };

}

);

Step 4: Migrate Authentication

Convex integrates with auth providers like Clerk, Auth0, and others. You can keep using the same provider with Encore.

If you're using Clerk:

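import { Gateway, Header } from "encore.dev/api";

import { authHandler } from "encore.dev/auth";

import { createRemoteJWKSet, jwtVerify } from "jose";

const JWKS = createRemoteJWKSet(

new URL("https://your-clerk-instance.clerk.accounts.dev/.well-known/jwks.json")

);

// Header<"Authorization"> tells Encore to read this field from the request header

interface AuthParams {

authorization: Header<"Authorization">;

}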

interface AuthData {

userID: string;

email: string;

}

export const auth = authHandler<AuthParams, AuthData>(

async (params) => {

const token = params.authorization.replace("Bearer ", "");

const { payload } = await jwtVerify(token, JWKS);

return {

userID: payload.sub as string,

email: (payload.email as string) || "",

};

}

);

export const gateway = new Gateway({ authHandler: auth });

Your frontend auth flow stays the same. Only the backend verification changes.
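The handler above strips the "Bearer " prefix with a plain string replace, which passes malformed headers straight to the JWT verifier. A stricter parse is a small addition. This is a sketch only; `extractBearerToken` is a hypothetical helper, not part of Encore or jose.

```typescript
// Returns the token from an "Authorization: Bearer <token>" header value,
// or null when the header is missing or uses a different scheme.
function extractBearerToken(header: string | undefined): string | null {
  if (!header) return null;
  // The scheme name is case-insensitive; the token must not contain spaces.
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match ? match[1] : null;
}
```

Inside the auth handler you would call it on `params.authorization` and reject the request when it returns null, before calling `jwtVerify`.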

Step 5: Migrate File Storage

Convex's file storage becomes S3:

Before (Convex):

// Upload

const storageId = await ctx.storage.store(file);

// Get URL

const url = await ctx.storage.getUrl(storageId);

After (Encore):

import { Bucket } from "encore.dev/storage/objects";

// publicUrl() below requires the bucket to be declared public

const files = new Bucket("files", { public: true, versioned: false });

export const uploadFile = api(

{ method: "POST", path: "/files", expose: true },

async (req: { filename: string; data: Buffer; contentType: string }) => {

await files.upload(req.filename, req.data, {

contentType: req.contentType,

});

return { url: files.publicUrl(req.filename) };

}

);

export const getFileUrl = api(

{ method: "GET", path: "/files/:filename/url", expose: true },

async ({ filename }: { filename: string }) => {

const exists = await files.exists(filename);

if (!exists) throw new Error("File not found");

return { url: files.publicUrl(filename) };

}

);
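One difference worth planning for: Convex hands back an opaque storage ID for each upload, while with S3 you choose the object key yourself. Using the raw filename as the key, as in the example above, means two uploads with the same name overwrite each other. A minimal sketch of a namespaced key follows; `buildObjectKey` and `sanitizeFilename` are hypothetical helpers, and the caller is assumed to supply a unique ID (for example a UUID).

```typescript
// Reduces a user-supplied filename to a safe final path segment.
function sanitizeFilename(filename: string): string {
  // Drop any directory part, then replace characters outside a safe set.
  const base = filename.split(/[\\/]/).pop() ?? "";
  const safe = base.replace(/[^A-Za-z0-9._-]/g, "_").replace(/^\.+/, "");
  return safe.length > 0 ? safe.slice(0, 100) : "file";
}

// Builds a key of the form "<userId>/<uniqueId>-<filename>" so uploads
// from different users, or repeated uploads, never share a key.
function buildObjectKey(userId: string, uniqueId: string, filename: string): string {
  return `${userId}/${uniqueId}-${sanitizeFilename(filename)}`;
}
```

Storing the resulting key in a database column gives you the same role the Convex storage ID played.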

Step 6: Migrate Scheduled Functions

Convex cron jobs become Encore CronJobs:

Before (Convex):

// convex/crons.ts

import { cronJobs } from "convex/server";

const crons = cronJobs();

crons.daily(

"cleanup-completed-tasks",

{ hourUTC: 2, minuteUTC: 0 },

"tasks:cleanupCompleted"

);

export default crons;

After (Encore):

import { CronJob } from "encore.dev/cron";

import { api } from "encore.dev/api";

export const cleanupCompleted = api(

{ method: "POST", path: "/internal/cleanup" },

async (): Promise<{ deleted: number }> => {

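// db.exec does not return a row count, so count the deleted rows in SQL

const row = await db.queryRow<{ count: number }>`

WITH deleted AS (

DELETE FROM tasks

WHERE is_completed = true

AND created_at < NOW() - INTERVAL '30 days'

RETURNING id

)

SELECT COUNT(*)::int AS count FROM deleted

`;

return { deleted: row?.count ?? 0 };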

}

);

const _ = new CronJob("cleanup-completed-tasks", {

title: "Clean up old completed tasks",

schedule: "0 2 * * *", // 2 AM UTC daily

endpoint: cleanupCompleted,

});
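If you have several Convex cron definitions, translating the schedule objects by hand is easy to get wrong because cron expressions put the minute before the hour. The helpers below sketch the mapping for the daily and hourly forms; `dailyToCron` and `hourlyToCron` are hypothetical names, and the input shapes are assumed to match the Convex schedule objects shown above.

```typescript
interface DailySchedule {
  hourUTC: number;
  minuteUTC: number;
}

interface HourlySchedule {
  minuteUTC: number;
}

// Throws when a schedule field is not an integer within [min, max].
function assertInRange(name: string, value: number, min: number, max: number): void {
  if (!Number.isInteger(value) || value < min || value > max) {
    throw new RangeError(`${name} must be an integer between ${min} and ${max}`);
  }
}

// { hourUTC: 2, minuteUTC: 0 } becomes "0 2 * * *" (minute first, then hour).
function dailyToCron({ hourUTC, minuteUTC }: DailySchedule): string {
  assertInRange("hourUTC", hourUTC, 0, 23);
  assertInRange("minuteUTC", minuteUTC, 0, 59);
  return `${minuteUTC} ${hourUTC} * * *`;
}

// { minuteUTC: 15 } becomes "15 * * * *".
function hourlyToCron({ minuteUTC }: HourlySchedule): string {
  assertInRange("minuteUTC", minuteUTC, 0, 59);
  return `${minuteUTC} * * * *`;
}
```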

Step 7: Handle Real-time (if needed)

If your app relies on Convex's real-time features, you have options:

Polling: The simplest approach. Have your frontend poll for updates every few seconds. Works fine for many use cases.
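A minimal polling sketch for the frontend might look like the following. It assumes a fetcher that returns the task list from the GET /tasks endpoint; `pollTasks` and `tasksChanged` are hypothetical names. The callback fires only when the list actually changes, which keeps re-renders close to what Convex's useQuery gave you.

```typescript
// Subset of the Task fields that the UI renders.
interface PolledTask {
  id: string;
  text: string;
  isCompleted: boolean;
}

// Returns true when the two lists differ in length, order, or any field.
function tasksChanged(prev: PolledTask[], next: PolledTask[]): boolean {
  if (prev.length !== next.length) return true;
  return next.some((t, i) => {
    const p = prev[i];
    return p.id !== t.id || p.text !== t.text || p.isCompleted !== t.isCompleted;
  });
}

// Calls fetchTasks immediately and then every intervalMs, invoking onChange
// only when the result differs from the last one. Returns a stop function.
function pollTasks(
  fetchTasks: () => Promise<PolledTask[]>,
  onChange: (tasks: PolledTask[]) => void,
  intervalMs = 5000,
): () => void {
  let stopped = false;
  let last: PolledTask[] | null = null;
  let timer: ReturnType<typeof setTimeout> | undefined;

  const tick = async (): Promise<void> => {
    if (stopped) return;
    try {
      const next = await fetchTasks();
      if (stopped) return;
      if (last === null || tasksChanged(last, next)) {
        last = next;
        onChange(next);
      }
    } catch (err) {
      // Keep polling after a transient failure.
      console.error("poll failed, will retry", err);
    }
    if (!stopped) timer = setTimeout(tick, intervalMs);
  };

  void tick();
  return () => {
    stopped = true;
    if (timer !== undefined) clearTimeout(timer);
  };
}
```

Scheduling the next request only after the previous one finishes avoids overlapping requests when the backend is slow. Call the returned stop function when the component unmounts.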

Pub/Sub for backend events: Use Encore's Pub/Sub to trigger backend actions when data changes:

import { Topic, Subscription } from "encore.dev/pubsub";

interface TaskEvent {

taskId: string;

userId: string;

action: "created" | "completed" | "deleted";

}

export const taskEvents = new Topic<TaskEvent>("task-events", {

deliveryGuarantee: "at-least-once",

});

// Publish in your mutation

export const createTask = api(

{ method: "POST", path: "/tasks", expose: true, auth: true },

async (req: { text: string }): Promise<Task> => {

const auth = getAuthData()!;

const task = await db.queryRow<Task>`...`;

// Publish event for subscribers

await taskEvents.publish({

taskId: task!.id,

userId: auth.userID,

action: "created",

});

return task!;

}

);

// Subscribe to handle events

const _ = new Subscription(taskEvents, "notify-collaborators", {

handler: async (event) => {

await notifyCollaborators(event.userId, event.taskId, event.action);

},

});

WebSocket streaming: Encore supports streaming APIs for real-time client connections. See the streaming docs.

Step 8: Deploy to AWS

- Connect your AWS account in the Encore Cloud dashboard. See the AWS setup guide for details.

- Push your code:

git push encore main

- Run your data migration script against the RDS instance

- Update your frontend to use the new API endpoints
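For the frontend step, the Convex client hooks are replaced by plain HTTP calls. The sketch below wraps the task endpoints from Steps 2 and 3 in a small client. `createTasksClient` is a hypothetical helper, the base URL is whatever your deployed environment exposes, and `getToken` is assumed to return the same JWT your auth provider already issues.

```typescript
// Response shape of the task endpoints from Steps 2 and 3.
interface ApiTask {
  id: string;
  text: string;
  isCompleted: boolean;
  createdAt: string; // dates arrive as ISO strings over JSON
}

// Minimal subset of the Fetch API, so a fake can be injected in tests.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body?: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

function createTasksClient(
  baseUrl: string,
  getToken: () => Promise<string>,
  fetchImpl: FetchLike,
) {
  const root = baseUrl.replace(/\/+$/, ""); // tolerate a trailing slash

  async function request<T>(method: string, path: string, body?: unknown): Promise<T> {
    const headers: Record<string, string> = {
      Authorization: `Bearer ${await getToken()}`,
    };
    if (body !== undefined) headers["Content-Type"] = "application/json";
    const res = await fetchImpl(`${root}${path}`, {
      method,
      headers,
      body: body === undefined ? undefined : JSON.stringify(body),
    });
    if (!res.ok) throw new Error(`${method} ${path} failed with status ${res.status}`);
    return (await res.json()) as T;
  }

  return {
    list: () => request<{ tasks: ApiTask[] }>("GET", "/tasks"),
    create: (text: string) => request<ApiTask>("POST", "/tasks", { text }),
    complete: (id: string) =>
      request<ApiTask>("PATCH", `/tasks/${encodeURIComponent(id)}/complete`),
    remove: (id: string) =>
      request<{ deleted: boolean }>("DELETE", `/tasks/${encodeURIComponent(id)}`),
  };
}
```

In the browser you would pass `(url, init) => fetch(url, init)` as the third argument. Keeping the fetch implementation injectable makes the client easy to test without a running backend.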

What Gets Provisioned

- RDS PostgreSQL for your database

- S3 for file storage

- Fargate for compute

- CloudWatch Events for scheduled functions

- SNS/SQS for Pub/Sub (if used)

- CloudWatch for logs and metrics

Migration Checklist

- Export Convex data

- Design PostgreSQL schema

- Create Encore app with migrations

- Write and test data migration script

- Convert queries to GET endpoints

- Convert mutations to POST/PATCH/DELETE endpoints

- Set up auth handler with your existing provider

- Migrate file storage

- Convert scheduled functions to cron jobs

- Plan for real-time updates (polling, Pub/Sub, or WebSockets)

- Update frontend to call REST API instead of Convex client

- Test in preview environment

- Deploy and run data migration

- Monitor and verify

Wrapping Up

Migrating from Convex to AWS means trading automatic reactivity for infrastructure ownership and SQL flexibility. If your app is heavily real-time, plan for the extra work to implement that layer. If you mainly use Convex as a typed database with occasional real-time features, the migration is more straightforward.

Encore maintains good TypeScript ergonomics while giving you control over where and how your code runs.