How to Migrate from Render to GCP

Move from Render's managed platform to your own Google Cloud account

Render provides simple deployments, but you don't control the infrastructure. When you need VPC networking, compliance controls, or access to Google Cloud services, migrating to your own GCP account makes sense.

The traditional path to GCP means learning Terraform, writing infrastructure config, and becoming your own DevOps team. That's a big jump from Render's simplicity.

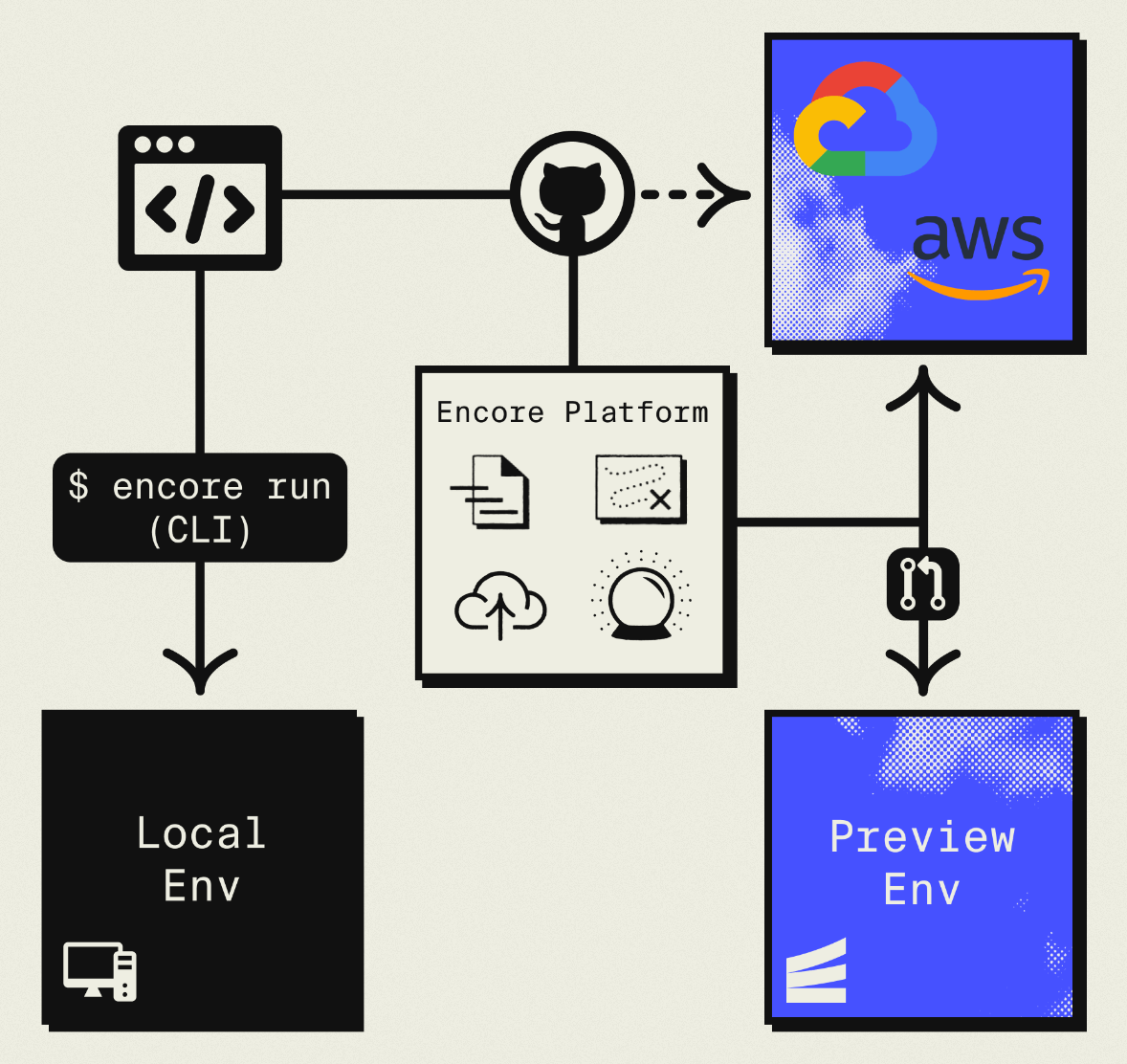

This guide takes a different approach: migrating to your own GCP project using Encore and Encore Cloud. Encore is an open-source TypeScript backend framework (11k+ GitHub stars) where you define infrastructure as type-safe objects in your code: databases, Pub/Sub, cron jobs, object storage. Encore Cloud then provisions these resources in your GCP project using managed services like Cloud SQL, GCP Pub/Sub, and Cloud Storage.

The result is GCP infrastructure you own and control, but with a developer experience similar to Render: push code, get a deployment. No Terraform to learn, no YAML to maintain. Companies like Groupon already use this approach to power their backends at scale.

What You're Migrating

| Render Component | GCP Equivalent (via Encore) |
|---|---|
| Web Services | Cloud Run |
| PostgreSQL | Cloud SQL |
| Redis | Pub/Sub or Memorystore |
| Cron Jobs | Cloud Scheduler + Cloud Run |
| Private Services | Internal Cloud Run |
| Background Workers | Pub/Sub subscribers |
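To make the mapping above concrete while you take inventory, here is a minimal TypeScript sketch that turns a list of Render services into migration targets. The `RenderService` shape and the `planMigration` helper are hypothetical and not part of Render or Encore. The `type` values (`web`, `pserv`, `worker`, `cron`) are assumed to match the ones in your render.yaml, so check them against your own file.

```typescript
// Hypothetical inventory helper: maps Render service types to the GCP
// targets listed in the table above. Not part of Render or Encore.
type RenderServiceType = "web" | "pserv" | "worker" | "cron";

interface RenderService {
  name: string;
  type: RenderServiceType;
}

// Targets copied from the table above.
const gcpTarget: Record<RenderServiceType, string> = {
  web: "Cloud Run",
  pserv: "Internal Cloud Run",
  worker: "Pub/Sub subscribers",
  cron: "Cloud Scheduler + Cloud Run",
};

// Produces one human-readable line per service.
function planMigration(services: RenderService[]): string[] {
  return services.map((s) => `${s.name}: ${s.type} -> ${gcpTarget[s.type]}`);
}

const plan = planMigration([
  { name: "api", type: "web" },
  { name: "daily-report", type: "cron" },
]);
console.log(plan.join("\n"));
```

A plain lookup table like this keeps the plan easy to review before you start rewriting any service.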

Why GCP?

Cloud Run performance: Cloud Run has fast cold starts (often under 100ms for Node.js) and scales to zero when idle. The developer experience is similar to Render.

GCP ecosystem: Direct access to BigQuery for analytics, Vertex AI for machine learning, Firestore for document storage, and other Google services.

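Sustained use discounts: On eligible resources such as Compute Engine VMs, GCP automatically lowers the price as your monthly usage increases, with no reserved capacity purchases required. Not every service qualifies, so check current pricing for the services you plan to use.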

Infrastructure control: VPC, IAM, Cloud Armor, and other GCP security and networking features.

What Encore Handles For You

When you deploy to GCP through Encore Cloud, every resource gets production defaults: VPC placement, least-privilege IAM service accounts, encryption at rest, automated backups where applicable, and Cloud Logging. You don't configure this per resource. It's automatic.

Encore follows GCP best practices and gives you guardrails. You can review infrastructure changes before they're applied, and everything runs in your own GCP project so you maintain full control.

Here's what that looks like in practice:

    import { SQLDatabase } from "encore.dev/storage/sqldb";
    import { Bucket } from "encore.dev/storage/objects";
    import { Topic } from "encore.dev/pubsub";
    import { CronJob } from "encore.dev/cron";

    // OrderEvent (a message type) and cleanup (an API endpoint) are defined elsewhere in your app.
    const db = new SQLDatabase("main", { migrations: "./migrations" });
    const uploads = new Bucket("uploads", { versioned: false });
    const events = new Topic<OrderEvent>("events", { deliveryGuarantee: "at-least-once" });
    const _ = new CronJob("daily-cleanup", { schedule: "0 0 * * *", endpoint: cleanup });

This provisions Cloud SQL, Cloud Storage, Pub/Sub, and Cloud Scheduler with proper networking, IAM, and monitoring. You write TypeScript or Go, and Encore handles the infrastructure provisioning. The only Encore-specific parts are the import statements. Your business logic is standard TypeScript, so you're not locked in.

See the infrastructure primitives docs for the full list of supported resources.

Step 1: Migrate Your Services

Render services become Encore APIs that deploy to Cloud Run:

render.yaml:

    services:
      - type: web
        name: api
        runtime: node
        buildCommand: npm install && npm run build
        startCommand: npm start

Encore:

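    import { api } from "encore.dev/api";

    // `db` is the SQLDatabase instance defined in Step 2.
    // Row shape returned by the queries below (field types are assumed).
    interface User {
      id: string;
      email: string;
      name: string;
      createdAt: Date;
    }

    // GET /users/:id returns a single user, or null if no row matches.
    export const getUser = api(
      { method: "GET", path: "/users/:id", expose: true },
      async ({ id }: { id: string }): Promise<User | null> => {
        const user = await db.queryRow<User>`
          SELECT id, email, name, created_at as "createdAt"
          FROM users WHERE id = ${id}
        `;
        return user;
      }
    );

    // GET /users returns the 100 most recently created users.
    export const listUsers = api(
      { method: "GET", path: "/users", expose: true },
      async (): Promise<{ users: User[] }> => {
        const rows = await db.query<User>`
          SELECT id, email, name, created_at as "createdAt"
          FROM users
          ORDER BY created_at DESC
          LIMIT 100
        `;
        // db.query returns an async iterator, so collect the rows into an array.
        const users: User[] = [];
        for await (const user of rows) {
          users.push(user);
        }
        return { users };
      }
    );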

No configuration files needed. Encore analyzes your code and provisions Cloud Run services automatically.

Step 2: Migrate PostgreSQL to Cloud SQL

Both use PostgreSQL, so the migration is a data transfer rather than a schema conversion.

Export from Render

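    # Get connection string from Render dashboard
    # --no-owner and --no-acl skip role-specific statements that may not apply on the target
    pg_dump --no-owner --no-acl "postgresql://user:pass@dpg-xxxx.render.com/mydb" > backup.sql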

Set Up the Encore Database

    import { SQLDatabase } from "encore.dev/storage/sqldb";

    const db = new SQLDatabase("main", {
      migrations: "./migrations",
    });

That's the complete database definition. Encore analyzes this at compile time and provisions Cloud SQL PostgreSQL when you deploy.
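The `migrations` option points at a directory of SQL files. As a hedged sketch, assuming the naming convention of a number, an underscore, a name, and the `.up.sql` suffix (for example `1_create_users.up.sql`; verify this against the Encore docs), the check below flags files that would not fit that pattern and lists the rest in numeric order. The `checkMigrationNames` helper and the file names are hypothetical.

```typescript
// Hypothetical pre-deploy check. Assumes migration files are named
// "<number>_<name>.up.sql"; confirm the convention in the Encore docs.
const MIGRATION_PATTERN = /^(\d+)_[A-Za-z0-9_]+\.up\.sql$/;

function checkMigrationNames(files: string[]): { ordered: string[]; invalid: string[] } {
  const valid: { file: string; version: number }[] = [];
  const invalid: string[] = [];
  for (const file of files) {
    const match = MIGRATION_PATTERN.exec(file);
    if (match) {
      valid.push({ file, version: Number(match[1]) });
    } else {
      invalid.push(file);
    }
  }
  // Sort numerically so that 10 comes after 2 (a plain string sort would not).
  valid.sort((a, b) => a.version - b.version);
  return { ordered: valid.map((v) => v.file), invalid };
}

const migrationCheck = checkMigrationNames([
  "10_add_orders.up.sql",
  "1_create_users.up.sql",
  "2_add_index.up.sql",
  "schema.sql",
]);
console.log(migrationCheck);
```

If you are starting from an existing Render database, one option is to place a schema-only dump in the first migration file and add later changes as new numbered files.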

Import to Cloud SQL

After your first Encore deploy:

    # Get the Cloud SQL connection string
    encore db conn-uri main --env=production

    # Import your data, replacing the placeholder below with the URI printed above
    psql "postgresql://user:pass@/main?host=/cloudsql/project:region:instance" < backup.sql

Encore handles the Cloud SQL proxy connection automatically.
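After the import, it is worth confirming that nothing was lost. The sketch below compares per-table row counts gathered from both databases, for example by running `SELECT count(*)` on each table. The `diffRowCounts` helper and the sample numbers are hypothetical, and matching counts are a sanity check rather than proof that the data is identical.

```typescript
// Table name -> number of rows, collected separately from each database.
type RowCounts = Record<string, number>;

interface CountMismatch {
  table: string;
  source: number | null; // null means the table is missing on that side
  target: number | null;
}

// Returns one entry per table whose count differs or that exists on one side only.
function diffRowCounts(source: RowCounts, target: RowCounts): CountMismatch[] {
  const tables = new Set<string>([...Object.keys(source), ...Object.keys(target)]);
  const mismatches: CountMismatch[] = [];
  for (const table of Array.from(tables).sort()) {
    const s = source[table] ?? null;
    const t = target[table] ?? null;
    if (s !== t) {
      mismatches.push({ table, source: s, target: t });
    }
  }
  return mismatches;
}

// Sample numbers are made up for illustration.
const countMismatches = diffRowCounts(
  { users: 1200, orders: 5300 },
  { users: 1200, orders: 5298 },
);
console.log(countMismatches);
```

An empty result means the counts line up. Any entry in the list is a table to investigate before you switch traffic.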

Step 3: Migrate Cron Jobs

Render cron services become Encore CronJobs:

render.yaml:

    services:
      - type: cron
        name: daily-report
        schedule: "0 9 * * *"
        buildCommand: npm install
        startCommand: node generate-report.js

Encore:

    import { CronJob } from "encore.dev/cron";
    import { api } from "encore.dev/api";

    // gatherDailyStats and sendReportEmail are your existing report logic,
    // moved over from generate-report.js.
    // The endpoint is not exposed, so only the cron job can call it.
    export const generateReport = api(
      { method: "POST", path: "/internal/daily-report" },
      async (): Promise<{ sent: boolean }> => {
        const stats = await gatherDailyStats();
        await sendReportEmail(stats);
        return { sent: true };
      }
    );

    const _ = new CronJob("daily-report", {
      title: "Generate and send daily report",
      schedule: "0 9 * * *",
      endpoint: generateReport,
    });

On GCP, this provisions Cloud Scheduler to trigger your Cloud Run service.
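In the example above, the schedule string carries over from render.yaml unchanged. As a rough illustration of what `0 9 * * *` encodes, the hypothetical `parseCron` helper below splits a five-field expression into named fields. It does not validate ranges or step values, and it is worth confirming in each platform's docs which time zone the schedule is evaluated in.

```typescript
interface CronFields {
  minute: string;
  hour: string;
  dayOfMonth: string;
  month: string;
  dayOfWeek: string;
}

// Splits a five-field cron expression into named parts (no range validation).
function parseCron(expr: string): CronFields {
  const parts = expr.trim().split(/\s+/);
  if (parts.length !== 5) {
    throw new Error(`expected 5 cron fields, got ${parts.length}`);
  }
  const [minute, hour, dayOfMonth, month, dayOfWeek] =
    parts as [string, string, string, string, string];
  return { minute, hour, dayOfMonth, month, dayOfWeek };
}

// "0 9 * * *": minute 0 of hour 9, on every day of every month.
const dailySchedule = parseCron("0 9 * * *");
console.log(dailySchedule);
```

Running each schedule from render.yaml through a check like this before the move can catch copy-paste mistakes such as a missing field.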

Step 4: Migrate Background Workers

Render background workers (separate services processing jobs) become Pub/Sub subscribers:

Before (Render worker with Bull):

    // worker.js - separate Render service
    import Queue from "bull";

    const processQueue = new Queue("process", process.env.REDIS_URL);

    processQueue.process(async (job) => {
      await processItem(job.data.itemId);
    });

After (Encore):

    import { Topic, Subscription } from "encore.dev/pubsub";
    import { api } from "encore.dev/api";

    // Message payload published to the topic.
    interface ProcessingJob {
      itemId: string;
      action: string;
    }

    const processingQueue = new Topic<ProcessingJob>("processing", {
      deliveryGuarantee: "at-least-once",
    });

    // Enqueue jobs from your API
    export const startProcessing = api(
      { method: "POST", path: "/items/:id/process", expose: true },
      async ({ id }: { id: string }): Promise<{ queued: boolean }> => {
        await processingQueue.publish({ itemId: id, action: "process" });
        return { queued: true };
      }
    );

    // Process jobs automatically (processItem is your existing worker logic)
    const _ = new Subscription(processingQueue, "process-items", {
      handler: async (job) => {
        await processItem(job.itemId);
      },
    });

The subscription handler runs once for each delivered message. Failed messages retry with exponential backoff. On GCP, this runs on Google Cloud Pub/Sub.
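Because delivery is at-least-once, the same message can arrive more than once (for example after a retry), so handlers should be safe to run twice. The sketch below shows the idea in plain TypeScript with an in-memory set. `CompletedJobs` and `handleOnce` are hypothetical names, and a real service would record completed jobs in durable storage such as the database, since an in-memory set is lost on restart and is not shared across instances.

```typescript
interface ProcessingJob {
  itemId: string;
  action: string;
}

// Tracks which jobs have finished. In-memory for illustration only.
class CompletedJobs {
  private keys = new Set<string>();

  // Two deliveries of the same job produce the same key.
  static keyOf(job: ProcessingJob): string {
    return `${job.itemId}:${job.action}`;
  }

  isDone(job: ProcessingJob): boolean {
    return this.keys.has(CompletedJobs.keyOf(job));
  }

  markDone(job: ProcessingJob): void {
    this.keys.add(CompletedJobs.keyOf(job));
  }
}

// Runs `work` unless the job already completed. The job is marked done only
// after `work` succeeds, so a failed attempt can still be retried.
async function handleOnce(
  job: ProcessingJob,
  completed: CompletedJobs,
  work: (job: ProcessingJob) => Promise<void>,
): Promise<"processed" | "skipped"> {
  if (completed.isDone(job)) {
    return "skipped";
  }
  await work(job);
  completed.markDone(job);
  return "processed";
}
```

Inside a subscription handler you would call something like `handleOnce(job, completed, (j) => processItem(j.itemId))`, with the completed-jobs store backed by your database.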

Step 5: Migrate Environment Variables

Render environment variables become Encore secrets:

    # Set secrets (the CLI prompts for each value)
    encore secret set --type=production StripeKey
    encore secret set --type=production SendgridKey

Use them in code:

    import { secret } from "encore.dev/config";
    import Stripe from "stripe";

    const stripeKey = secret("StripeKey");

    // Call the secret as a function to read its value
    const stripe = new Stripe(stripeKey());
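Render environment variables are often named in `SCREAMING_SNAKE_CASE`, while the secrets above use names like `StripeKey`. If you have many variables to move, a small helper can generate the new names consistently. The `toSecretName` function below is a hypothetical convenience, and the PascalCase convention is an assumption based on the examples in this guide, not an Encore requirement.

```typescript
// Hypothetical helper: converts an env var name such as STRIPE_KEY
// into a PascalCase secret name such as StripeKey.
function toSecretName(envVar: string): string {
  return envVar
    .split("_")
    .filter((part) => part.length > 0) // ignore leading or doubled underscores
    .map((part) => part.charAt(0).toUpperCase() + part.slice(1).toLowerCase())
    .join("");
}

// Example variable names, assumed for illustration.
const renderVars = ["STRIPE_KEY", "SENDGRID_KEY"];
for (const v of renderVars) {
  console.log(`${v} -> ${toSecretName(v)}`);
}
```

You would still set each value yourself with `encore secret set`; the helper only keeps the naming consistent.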

Step 6: Deploy to GCP

- Connect your GCP project in the Encore Cloud dashboard. See the GCP setup guide for details.

- Push your code:

    git push encore main

- Import database data after the first deploy

- Update DNS to point to your new endpoints

- Verify and remove Render services

What Gets Provisioned

Encore creates in your GCP project:

- Cloud Run for your services

- Cloud SQL PostgreSQL for databases

- GCP Pub/Sub for messaging

- Cloud Scheduler for cron jobs

- Cloud Logging for application logs

- IAM service accounts with least-privilege access

You can view and manage these in the GCP Console.

Migration Checklist

- Inventory Render services and their dependencies

- Export PostgreSQL database

- Create Encore app with service structure

- Set up database migrations

- Import data to Cloud SQL

- Convert cron jobs to CronJob

- Convert workers to Pub/Sub subscribers

- Migrate environment variables to secrets

- Test in preview environment

- Update DNS

- Remove Render services

Wrapping Up

Cloud Run's scaling and cold start performance make it a natural fit for teams migrating from Render. You get a similar developer experience with full control over your GCP infrastructure.