How to Migrate from Supabase to GCP

Move your backend to your own Google Cloud account for more control and lower costs at scale

Supabase bundles a lot into one platform: Postgres, auth, storage, edge functions, and real-time subscriptions. That's convenient for getting started. But as your application grows, you might want more control over infrastructure, better cost visibility, or access to Google Cloud services that Supabase doesn't expose.

The traditional path to GCP means learning Terraform, writing infrastructure config, and stitching together Cloud SQL, Cloud Run, and Cloud Storage yourself. That's a big jump from Supabase's integrated experience.

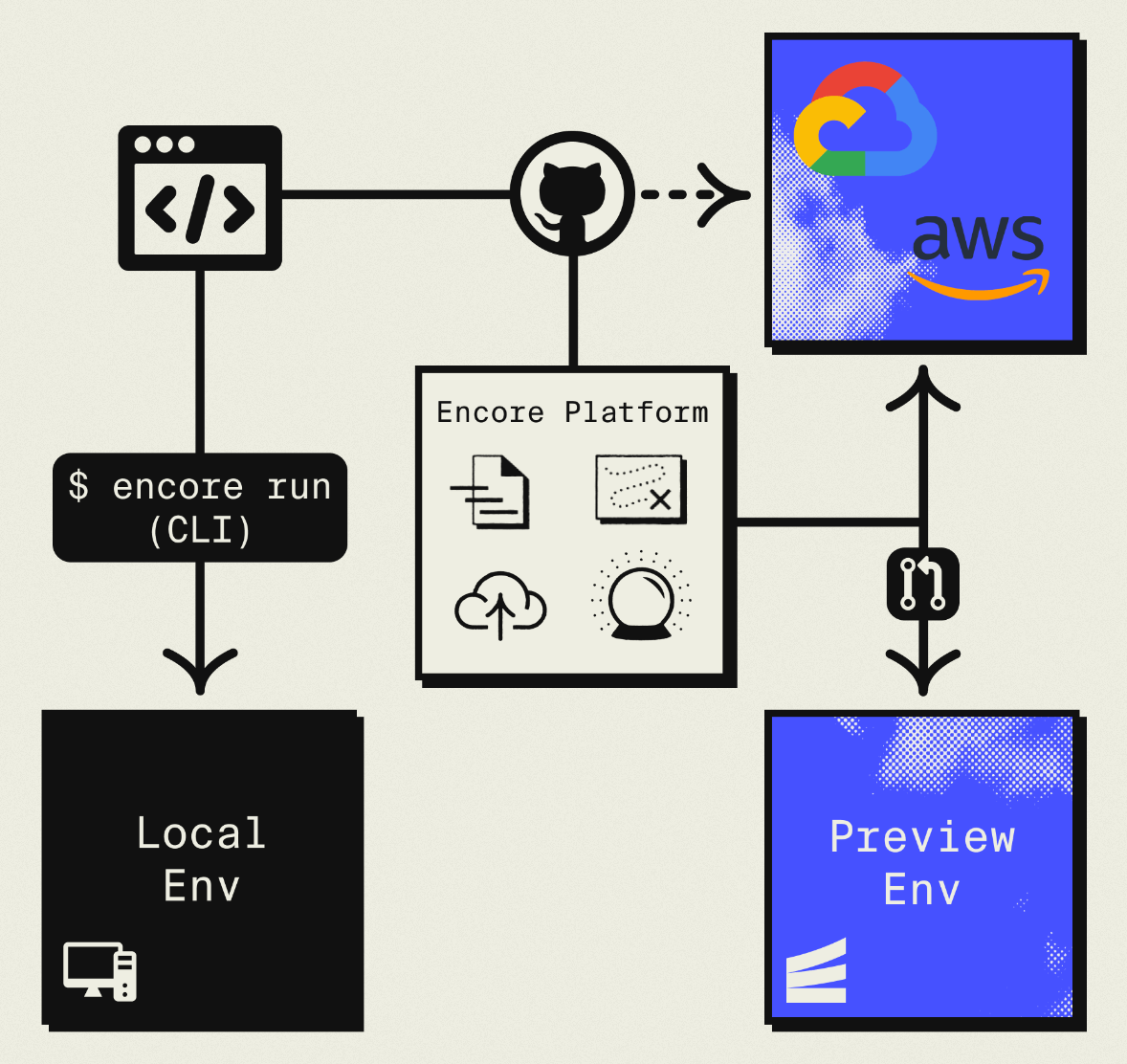

This guide takes a different approach: migrating to your own GCP project using Encore and Encore Cloud. Encore is an open-source backend framework for TypeScript and Go (11k+ GitHub stars) where you define infrastructure as type-safe objects in your code: databases, Pub/Sub, cron jobs, object storage. Encore Cloud then provisions these resources in your GCP project using managed services like Cloud SQL, GCP Pub/Sub, and Cloud Storage.

The result is GCP infrastructure you own and control, without the DevOps overhead: you write backend code, and Encore handles the rest. Companies like Groupon already use this approach to power their backends at scale.

What You're Migrating

| Supabase Component | GCP Equivalent (via Encore) |
|---|---|
| PostgreSQL Database | Cloud SQL PostgreSQL |
| Auth (GoTrue) | Encore Auth (or Clerk, WorkOS, etc.) |
| Storage | Google Cloud Storage |
| Edge Functions | Cloud Run |
| Realtime | GCP Pub/Sub |

Why GCP?

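Sustained use discounts: GCP automatically discounts eligible Compute Engine VMs that run for a large part of the month, with no reserved capacity purchase required. Managed services such as Cloud SQL and Cloud Run are discounted through committed use discounts instead, if you can commit to a baseline of usage.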

Google Cloud ecosystem: If you're using BigQuery for analytics, Vertex AI for machine learning, or other GCP services, running your backend on GCP simplifies networking and reduces egress costs.

Data residency: GCP offers regions that Supabase may not support, which matters when compliance rules require data to stay in a specific location.

Existing GCP credits: Many startups have GCP credits through Google for Startups or other programs.

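Cloud Run performance: Cloud Run scales to zero when idle and starts instances on demand. Cold-start latency depends on your container image and startup work; if you need to avoid it, you can keep a minimum number of instances warm.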

What Encore Handles For You

When you deploy to GCP through Encore Cloud, every resource gets production defaults: VPC placement, least-privilege IAM service accounts, encryption at rest, automated backups where applicable, and Cloud Logging. You don't configure this per resource. It's automatic.

Encore follows GCP best practices and gives you guardrails. You can review infrastructure changes before they're applied, and everything runs in your own GCP project so you maintain full control.

Here's what that looks like in practice:

import { api } from "encore.dev/api";
import { SQLDatabase } from "encore.dev/storage/sqldb";
import { Bucket } from "encore.dev/storage/objects";
import { Topic } from "encore.dev/pubsub";
import { CronJob } from "encore.dev/cron";

interface OrderEvent { orderId: string } // example message type

const db = new SQLDatabase("main", { migrations: "./migrations" });
const uploads = new Bucket("uploads", { versioned: false });
const events = new Topic<OrderEvent>("events", { deliveryGuarantee: "at-least-once" });

// Endpoint the cron job calls once a day (body omitted)
export const cleanup = api({}, async () => {});
const _ = new CronJob("daily-cleanup", { schedule: "0 0 * * *", endpoint: cleanup });

This provisions Cloud SQL, GCS, Pub/Sub, and Cloud Scheduler with proper networking, IAM, and monitoring. You write TypeScript or Go; Encore handles the provisioning you would otherwise write in Terraform. The Encore-specific parts are these resource declarations and their imports. Your business logic is standard TypeScript, so you're not locked in.

See the infrastructure primitives docs for the full list of supported resources.

Step 1: Migrate Your Database

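Supabase uses PostgreSQL, and so does Cloud SQL, so the migration is mostly data transfer, not schema conversion. The exceptions are Supabase-specific objects: the auth and storage schemas, roles such as anon and authenticated, and row-level security policies that call functions like auth.uid(). Those don't exist in Cloud SQL, so export only your own public schema.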

Export from Supabase

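Get your connection string from the Supabase dashboard (Settings > Database), then export the rows in your public schema. The dump is data-only because Encore's migrations will create the tables:

pg_dump -h db.xxxx.supabase.co -U postgres -d postgres --data-only --schema=public > backup.sql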

You'll be prompted for the database password from your Supabase settings.

Set Up the Encore Database

import { SQLDatabase } from "encore.dev/storage/sqldb";

const db = new SQLDatabase("main", {
  migrations: "./migrations",
});

That's the complete database definition. Encore analyzes this at compile time and provisions Cloud SQL PostgreSQL when you deploy.

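Put your migration files in ./migrations. If you don't have them, generate an initial migration from your current schema. Review the output before committing it and remove anything that depends on Supabase, such as row-level security policies that call auth.uid() or column defaults that reference the extensions schema:

pg_dump --schema-only --schema=public --no-owner --no-privileges "your-supabase-connection" > migrations/001_initial.up.sql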

Import to Cloud SQL

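After your first Encore deploy, Cloud SQL is provisioned automatically and your migrations have created the tables. Get the connection string and import the data:

# Get the Cloud SQL connection string
encore db conn-uri main --env=production

# Import the backup (stop at the first error instead of continuing)
psql -v ON_ERROR_STOP=1 "your-cloud-sql-connection" < backup.sql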
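Before switching traffic, it's worth checking that every table arrived intact. A minimal sketch, assuming you have already collected per-table row counts from both databases (for example by running SELECT count(*) against each table with psql); diffRowCounts and the counts below are illustrative, not part of Encore or pg_dump.

```typescript
// Row counts per table, e.g. collected by running
// SELECT count(*) FROM <table> against each database.
type RowCounts = Record<string, number>;

interface CountMismatch {
  table: string;
  source: number | undefined; // count in Supabase, undefined if the table is missing
  target: number | undefined; // count in Cloud SQL, undefined if the table is missing
}

// Hypothetical helper: lists every table whose row count differs
// between the source (Supabase) and target (Cloud SQL) snapshots,
// sorted by table name.
export function diffRowCounts(source: RowCounts, target: RowCounts): CountMismatch[] {
  const tables = new Set([...Object.keys(source), ...Object.keys(target)]);
  const mismatches: CountMismatch[] = [];
  for (const table of [...tables].sort()) {
    if (source[table] !== target[table]) {
      mismatches.push({ table, source: source[table], target: target[table] });
    }
  }
  return mismatches;
}

// Example with made-up counts: reports one mismatch for "inventory" (340 vs 338).
const mismatches = diffRowCounts(
  { products: 120, inventory: 340 },
  { products: 120, inventory: 338 },
);
console.log(mismatches);
```

An empty result means the counts match; it doesn't prove the row contents are identical, so spot-check a few rows in tables that matter most.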

Update Your Queries

The main change is replacing Supabase's JavaScript client with SQL queries:

Before (Supabase client):

const { data, error } = await supabase
  .from('products')
  .select('id, name, price')
  .order('created_at', { ascending: false })
  .limit(20);

After (Encore):

import { api } from "encore.dev/api";
import { SQLDatabase } from "encore.dev/storage/sqldb";

const db = new SQLDatabase("main", { migrations: "./migrations" });

interface Product {
  id: string;
  name: string;
  price: number;
  createdAt: Date;
}

export const listProducts = api(
  { method: "GET", path: "/products", expose: true },
  async (): Promise<{ products: Product[] }> => {
    const rows = await db.query<Product>`
      SELECT id, name, price, created_at as "createdAt"
      FROM products
      ORDER BY created_at DESC
      LIMIT 20
    `;
    const products: Product[] = [];
    for await (const row of rows) {
      products.push(row);
    }
    return { products };
  }
);
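Encore's db.query is a tagged template: per Encore's documentation, values interpolated with ${...} are sent to Postgres as query parameters rather than pasted into the SQL text, which is what keeps queries that embed user input safe from SQL injection. The sketch below shows the general technique with a hypothetical sql tag that only builds the placeholder text and value list; it is not Encore's implementation and doesn't talk to a database.

```typescript
// A parameterized query: SQL text with $1, $2, ... placeholders plus the values.
interface ParamQuery {
  text: string;
  values: unknown[];
}

// Hypothetical tag: turns each ${...} interpolation into a positional
// placeholder instead of splicing the value into the SQL string.
export function sql(strings: TemplateStringsArray, ...values: unknown[]): ParamQuery {
  let text = strings[0];
  for (let i = 0; i < values.length; i++) {
    text += `$${i + 1}` + strings[i + 1];
  }
  return { text, values };
}

// Supabase: supabase.from('products').select('id, name').eq('category', category)
// SQL equivalent, with the user-supplied value kept out of the query text:
const category = "books'; DROP TABLE products; --";
const q = sql`SELECT id, name FROM products WHERE category = ${category}`;
console.log(q.text);   // SELECT id, name FROM products WHERE category = $1
console.log(q.values); // the raw string, passed to the driver separately
```

Because the malicious string only ever travels as a parameter value, Postgres treats it as data to compare against, never as SQL to execute.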

With SQL, you can write queries that would require multiple Supabase calls:

// Get products with inventory counts in one query
interface ProductWithInventory {
  id: string;
  name: string;
  price: number;
  totalStock: number;
}

export const getProductsWithInventory = api(
  { method: "GET", path: "/products/inventory", expose: true },
  async (): Promise<{ products: ProductWithInventory[] }> => {
    // SUM over an integer column returns bigint; the cast keeps totalStock an int
    const rows = await db.query<ProductWithInventory>`
      SELECT p.id, p.name, p.price,
        COALESCE(SUM(i.quantity), 0)::int as "totalStock"
      FROM products p
      LEFT JOIN inventory i ON p.id = i.product_id
      GROUP BY p.id
      ORDER BY p.created_at DESC
    `;
    const products: ProductWithInventory[] = [];
    for await (const row of rows) {
      products.push(row);
    }
    return { products };
  }
);

Step 2: Migrate Authentication

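Supabase Auth handles signup, login, OAuth, and sessions. You can implement this with Encore's auth handler or use a third-party service. If you move off Supabase Auth, note that user records live in Supabase's auth.users table rather than your public schema, so copy the columns you need (such as id, email, and encrypted_password) into your own users table. Supabase stores bcrypt password hashes there, so existing users can only keep their passwords if your login code can verify bcrypt.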

Custom Auth Implementation

import { authHandler } from "encore.dev/auth";
import { api, APIError, Gateway, Header } from "encore.dev/api";
import { secret } from "encore.dev/config";
import { SignJWT, jwtVerify } from "jose";
import { verify } from "@node-rs/argon2";

// Assumes `db` is the SQLDatabase declared in Step 1.
const jwtSecret = secret("JWTSecret");

interface AuthParams {
  // Encore fills this field from the Authorization request header.
  authorization: Header<"Authorization">;
}

interface AuthData {
  userID: string;
  email: string;
}

export const auth = authHandler<AuthParams, AuthData>(
  async (params) => {
    // Strip the "Bearer " scheme prefix (case-insensitive).
    const token = params.authorization.replace(/^Bearer\s+/i, "");
    try {
      const { payload } = await jwtVerify(
        token,
        new TextEncoder().encode(jwtSecret())
      );
      return {
        userID: payload.sub as string,
        email: payload.email as string,
      };
    } catch {
      throw APIError.unauthenticated("Invalid token");
    }
  }
);

export const gateway = new Gateway({ authHandler: auth });

// Login endpoint
export const login = api(
  { method: "POST", path: "/auth/login", expose: true },
  async (req: { email: string; password: string }): Promise<{ token: string }> => {
    const user = await db.queryRow<{ id: string; email: string; passwordHash: string }>`
      SELECT id, email, password_hash as "passwordHash"
      FROM users WHERE email = ${req.email}
    `;
    if (!user || !(await verify(user.passwordHash, req.password))) {
      throw APIError.unauthenticated("Invalid credentials");
    }
    const token = await new SignJWT({ email: user.email })
      .setProtectedHeader({ alg: "HS256" })
      .setSubject(user.id)
      .setIssuedAt()
      .setExpirationTime("7d")
      .sign(new TextEncoder().encode(jwtSecret()));
    return { token };
  }
);
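Migrated Supabase users arrive with bcrypt hashes, while the login endpoint above verifies argon2 hashes. One common approach is to detect the scheme from the hash prefix, verify with the matching library, and re-hash with argon2 after a successful login. The helpers below are a hypothetical sketch of the detection step only; the bcrypt check itself would use a bcrypt library of your choice.

```typescript
type HashScheme = "bcrypt" | "argon2" | "unknown";

// Hypothetical helper: identifies a stored password hash by its standard
// prefix so login can pick the matching verifier.
// bcrypt hashes start with $2a$, $2b$, or $2y$ followed by a 2-digit cost;
// argon2 hashes start with $argon2id$, $argon2i$, or $argon2d$.
export function detectHashScheme(storedHash: string): HashScheme {
  if (/^\$2[aby]\$\d{2}\$/.test(storedHash)) return "bcrypt";
  if (/^\$argon2(id|i|d)\$/.test(storedHash)) return "argon2";
  return "unknown";
}

// True when the stored hash should be replaced with an argon2 hash
// after the user next logs in successfully.
export function needsRehash(storedHash: string): boolean {
  return detectHashScheme(storedHash) !== "argon2";
}

console.log(detectHashScheme("$2a$10$abcdefghijklmnopqrstuv")); // bcrypt
```

On a successful bcrypt check, hash the submitted password with argon2 (hash from @node-rs/argon2) and update password_hash, so needsRehash returns false on that user's next login.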

Keep Using Supabase Auth Temporarily

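During migration, you can keep Supabase Auth as the identity provider and verify its tokens in your Encore auth handler. The JWKS endpoint below serves public keys for projects that use Supabase's asymmetric JWT signing keys; projects still signing with the legacy shared JWT secret (HS256) verify with that secret instead, as in the custom handler above.

import { authHandler } from "encore.dev/auth";
import { APIError } from "encore.dev/api";
import { createRemoteJWKSet, jwtVerify } from "jose";

// Reuses the AuthParams and AuthData interfaces from the handler above.
const SUPABASE_JWKS = createRemoteJWKSet(
  new URL("https://your-project.supabase.co/auth/v1/.well-known/jwks.json")
);

export const auth = authHandler<AuthParams, AuthData>(
  async (params) => {
    const token = params.authorization.replace(/^Bearer\s+/i, "");
    try {
      const { payload } = await jwtVerify(token, SUPABASE_JWKS);
      return {
        userID: payload.sub as string,
        email: payload.email as string,
      };
    } catch {
      throw APIError.unauthenticated("Invalid token");
    }
  }
);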
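While both systems are live, your API may receive Supabase-issued tokens from existing sessions and tokens from the new login endpoint. A sketch of one routing approach, assuming Supabase tokens carry your project's Auth URL (https://your-project.supabase.co/auth/v1) in the iss claim, which you should confirm by decoding a token from your own project: read the unverified issuer only to pick a verifier, then run that verifier's full signature check. tokenSource is a hypothetical helper; tokens from the login endpoint above have no iss claim, so they fall through to "encore".

```typescript
// Which verifier should check a token during the transition period.
type TokenSource = "supabase" | "encore";

// Hypothetical helper: reads the *unverified* `iss` claim from a JWT payload
// only to decide which key to verify it with. Nothing else in the payload
// may be trusted until the chosen verifier has checked the signature.
export function tokenSource(token: string, supabaseIssuer: string): TokenSource {
  const parts = token.split(".");
  if (parts.length !== 3) throw new Error("malformed JWT");
  const payload = JSON.parse(Buffer.from(parts[1], "base64url").toString("utf8"));
  return payload.iss === supabaseIssuer ? "supabase" : "encore";
}

// Example: an unsigned token built only to exercise the routing logic.
const b64 = (obj: object) => Buffer.from(JSON.stringify(obj)).toString("base64url");
const fake = `${b64({ alg: "HS256" })}.${b64({ iss: "https://your-project.supabase.co/auth/v1", sub: "u1" })}.sig`;
console.log(tokenSource(fake, "https://your-project.supabase.co/auth/v1")); // supabase
```

In the auth handler, a "supabase" result would go to the JWKS verification and an "encore" result to the shared-secret verification; any parse error should map to APIError.unauthenticated.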

Step 3: Migrate Storage

Supabase Storage becomes Google Cloud Storage:

import { api } from "encore.dev/api";
import { Bucket } from "encore.dev/storage/objects";

// public: true is required for publicUrl(); leave it off for private files.
const uploads = new Bucket("uploads", {
  public: true,
  versioned: false,
});

export const uploadFile = api(
  { method: "POST", path: "/files", expose: true, auth: true },
  async (req: { filename: string; data: string; contentType: string }): Promise<{ url: string }> => {
    // data is sent base64-encoded so the request body stays valid JSON.
    await uploads.upload(req.filename, Buffer.from(req.data, "base64"), {
      contentType: req.contentType,
    });
    return { url: uploads.publicUrl(req.filename) };
  }
);

export const deleteFile = api(
  { method: "DELETE", path: "/files/:filename", expose: true, auth: true },
  async ({ filename }: { filename: string }): Promise<{ deleted: boolean }> => {
    await uploads.remove(filename);
    return { deleted: true };
  }
);
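Supabase Storage access is often controlled with row-level security policies that restrict each user to a folder named after their user ID. GCS buckets have no equivalent per-row policies, so the API has to enforce ownership itself. A minimal sketch with hypothetical helpers, assuming you keep the same userID/filename layout:

```typescript
// Hypothetical helpers for per-user object keys.

// Builds "<userID>/<filename>", dropping any directory parts the client sent
// so a caller cannot write outside their own prefix.
export function userObjectKey(userID: string, filename: string): string {
  const base = filename.split(/[\\/]/).pop() ?? "";
  if (base === "" || base === "." || base === "..") {
    throw new Error("invalid filename");
  }
  return `${userID}/${base}`;
}

// True if the key lives under the given user's prefix.
export function ownsKey(userID: string, key: string): boolean {
  return key.startsWith(`${userID}/`);
}

console.log(userObjectKey("user-123", "../avatars/me.png")); // user-123/me.png
```

In uploadFile and deleteFile, you would pass the authenticated user's ID (the userID your auth handler returns) to userObjectKey or ownsKey rather than using the client-supplied filename directly as the object key.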

Migrate Existing Files

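# Download from Supabase via its S3-compatible endpoint
# (uses S3 access keys generated in the Supabase dashboard)
aws s3 sync s3://your-supabase-bucket ./backup \
  --endpoint-url https://your-project.supabase.co/storage/v1/s3 \
  --region your-project-region

# Upload to GCS after Encore deployment
# (check the Cloud Console for the bucket name, which may differ from "uploads")
gsutil -m rsync -r ./backup gs://your-encore-bucket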
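Copying the files is only half of the job if your database stores Supabase public URLs (for example avatar URLs in a profiles table). Supabase public object URLs follow the pattern https://your-project.supabase.co/storage/v1/object/public/bucket/path, and the sync commands above keep object paths unchanged, so the rewrite is a prefix swap. A sketch with a hypothetical helper; the new base URL is an assumption you would take from your deployed bucket:

```typescript
// Hypothetical helper: rewrites a Supabase Storage public URL to point at
// the migrated object. Returns null for URLs that don't belong to the bucket.
export function rewriteStorageUrl(
  url: string,
  supabaseProjectUrl: string, // e.g. "https://your-project.supabase.co"
  bucket: string,             // Supabase bucket name
  newBase: string,            // base URL your new bucket serves files from (assumed)
): string | null {
  const prefix = `${supabaseProjectUrl}/storage/v1/object/public/${bucket}/`;
  if (!url.startsWith(prefix)) return null;
  const objectPath = url.slice(prefix.length);
  // Drop trailing slashes on the base so we never produce "//".
  return `${newBase.replace(/\/+$/, "")}/${objectPath}`;
}

const migrated = rewriteStorageUrl(
  "https://your-project.supabase.co/storage/v1/object/public/avatars/u1/me.png",
  "https://your-project.supabase.co",
  "avatars",
  "https://storage.googleapis.com/your-encore-bucket",
);
console.log(migrated); // https://storage.googleapis.com/your-encore-bucket/u1/me.png
```

Running this over each URL column (and logging the null results) gives you a list of references that still point somewhere unexpected.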

Step 4: Migrate Edge Functions to Cloud Run

Supabase Edge Functions use Deno. Encore APIs use Node.js/TypeScript and deploy to Cloud Run:

Before (Supabase Edge Function):

import { serve } from "https://deno.land/std@0.168.0/http/server.ts";

serve(async (req) => {
  const { items } = await req.json();
  const total = items.reduce((sum: number, item: any) => sum + item.price, 0);
  return new Response(JSON.stringify({ total }));
});

After (Encore):

import { api } from "encore.dev/api";

interface CartItem {
  productId: string;
  price: number;
  quantity: number;
}

export const calculateCart = api(
  { method: "POST", path: "/cart/calculate", expose: true },
  async (req: { items: CartItem[] }): Promise<{ total: number; itemCount: number }> => {
    const total = req.items.reduce(
      (sum, item) => sum + item.price * item.quantity,
      0
    );
    const itemCount = req.items.reduce((sum, item) => sum + item.quantity, 0);
    return { total, itemCount };
  }
);

If your Edge Functions use Deno-specific APIs (such as Deno.env.get or URL imports from deno.land), you'll need Node.js equivalents.
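A low-risk way to port an Edge Function is to move its logic into a plain function with no Deno or Encore imports. That function can be unit-tested on its own, and the Encore endpoint becomes a thin wrapper around it. The sketch below does this for the cart calculation; it also switches to integer cents (priceCents is an assumed field, not in the original) because summing floating-point prices can produce values like 0.30000000000000004.

```typescript
interface CartLine {
  productId: string;
  priceCents: number; // unit price in integer cents (assumed field)
  quantity: number;
}

// Pure business logic: no Deno or Encore imports, so it can be tested
// directly and called from the Encore endpoint.
export function cartTotals(items: CartLine[]): { totalCents: number; itemCount: number } {
  let totalCents = 0;
  let itemCount = 0;
  for (const item of items) {
    // Reject fractional cents and invalid quantities instead of guessing.
    if (!Number.isInteger(item.priceCents) || !Number.isInteger(item.quantity) || item.quantity < 0) {
      throw new Error(`invalid line for product ${item.productId}`);
    }
    totalCents += item.priceCents * item.quantity;
    itemCount += item.quantity;
  }
  return { totalCents, itemCount };
}

console.log(cartTotals([
  { productId: "a", priceCents: 1999, quantity: 2 },
  { productId: "b", priceCents: 500, quantity: 1 },
])); // { totalCents: 4498, itemCount: 3 }
```

The calculateCart handler would then just call cartTotals(req.items) and return the result, and the same tests keep passing whether the logic runs in Deno or Node.js.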

Step 5: Migrate Realtime to GCP Pub/Sub

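Supabase Realtime pushes database changes to subscribed clients over WebSockets. With Encore, you publish explicit events to Pub/Sub instead. Pub/Sub delivers messages between backend services, not to browsers, so frontend code that listened for changes needs another channel, such as polling or a streaming endpoint.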

Before (Supabase Realtime):

supabase
  .channel('inventory')
  .on('postgres_changes',
    { event: 'UPDATE', schema: 'public', table: 'products' },
    (payload) => console.log('Product updated:', payload)
  )
  .subscribe();

After (Encore Pub/Sub):

import { api } from "encore.dev/api";
import { Topic, Subscription } from "encore.dev/pubsub";

// Assumes `db` is the SQLDatabase declared in Step 1.

interface InventoryEvent {
  productId: string;
  newQuantity: number;
  timestamp: Date;
}

export const inventoryChanges = new Topic<InventoryEvent>("inventory-changes", {
  deliveryGuarantee: "at-least-once",
});

// Publish when inventory changes
export const updateInventory = api(
  { method: "PATCH", path: "/products/:productId/inventory", expose: true, auth: true },
  async ({ productId, quantity }: { productId: string; quantity: number }): Promise<{ success: boolean }> => {
    await db.exec`UPDATE products SET quantity = ${quantity} WHERE id = ${productId}`;
    await inventoryChanges.publish({
      productId,
      newQuantity: quantity,
      timestamp: new Date(),
    });
    return { success: true };
  }
);

// Placeholder: replace with your own alerting (email, Slack, etc.)
async function sendReorderAlert(productId: string): Promise<void> {
  console.log(`Reorder needed for product ${productId}`);
}

// Subscribe to handle events
const _ = new Subscription(inventoryChanges, "check-reorder", {
  handler: async (event) => {
    if (event.newQuantity < 10) {
      await sendReorderAlert(event.productId);
    }
  },
});

On GCP, this provisions native GCP Pub/Sub topics and subscriptions.
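Because the topic uses at-least-once delivery, the check-reorder handler can receive the same event more than once, which would send duplicate reorder alerts. A minimal sketch of deduplication, assuming a key built from productId and timestamp identifies an event; handleOnce is hypothetical, and its in-memory Set is per-instance and lost on restart, so a real deployment would record processed keys in the database instead.

```typescript
interface InventoryEvent {
  productId: string;
  newQuantity: number;
  timestamp: Date;
}

// Stand-in for a processed-events table (illustration only).
const processed = new Set<string>();

// Runs `handler` at most once per event key. Returns false for duplicates.
export async function handleOnce(
  event: InventoryEvent,
  handler: (e: InventoryEvent) => Promise<void>,
): Promise<boolean> {
  const key = `${event.productId}:${event.timestamp.toISOString()}`;
  if (processed.has(key)) return false; // already handled (or in progress)
  processed.add(key); // claim before awaiting so a concurrent duplicate is skipped
  try {
    await handler(event);
  } catch (err) {
    processed.delete(key); // release the claim so a redelivery can retry
    throw err;
  }
  return true;
}
```

Re-throwing on failure matters: it lets Pub/Sub redeliver the message, and because the key was released, the retry actually runs.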

Step 6: Deploy to GCP

- Connect your GCP project in the Encore Cloud dashboard. See the GCP setup guide for details.

- Push your code: git push encore main

- Run data migrations (database import, file sync)

- Update your frontend to use new API endpoints

- Update DNS

What Gets Provisioned

Encore creates in your GCP project:

- Cloud SQL PostgreSQL for databases

- Google Cloud Storage for file storage

- GCP Pub/Sub for messaging

- Cloud Run for your APIs

- Cloud Logging for logs

- IAM service accounts with minimal permissions

You have full access through the Google Cloud Console.

Migration Checklist

- Export Supabase database

- Set up Encore database with migrations

- Import data to Cloud SQL

- Decide on auth approach (custom or third-party)

- Implement auth handler

- Sync files from Supabase Storage to GCS

- Convert Edge Functions to Encore APIs

- Replace Realtime with Pub/Sub

- Update frontend to use new API

- Test in preview environment

- Update DNS

- Monitor for issues

Wrapping Up

Migrating from Supabase to GCP gives you infrastructure ownership while keeping a stack you know: Cloud SQL runs the same PostgreSQL you're used to, and your APIs run on Cloud Run as ordinary Node.js services. Encore handles the GCP provisioning while you focus on the application code.