How to Migrate from Heroku to GCP

Move from Heroku to your own Google Cloud account with a simple deployment workflow

Heroku offered simple deployments, but you didn't control the infrastructure. With Heroku now in maintenance mode after 19 years, teams need to migrate. Google Cloud Platform is a strong option: Cloud Run has fast cold starts, scales to zero when idle, and GCP offers sustained use discounts without reserved capacity purchases.

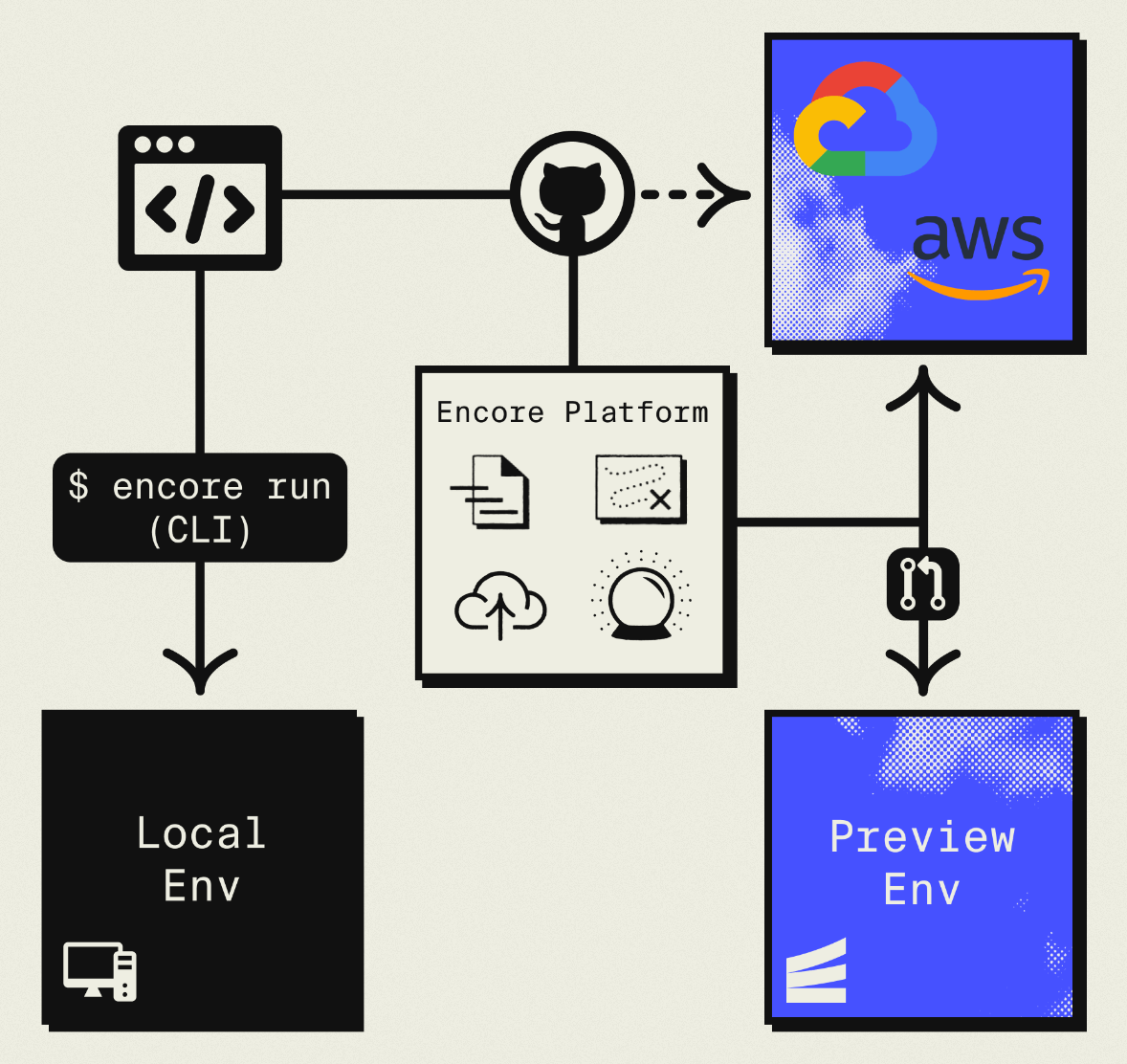

The traditional path to GCP means learning Terraform, writing infrastructure config, and managing your own DevOps. This guide takes a different approach: migrating to your own GCP project using Encore and Encore Cloud. Encore is an open-source TypeScript backend framework (11k+ GitHub stars) where you define infrastructure as type-safe objects in your code: databases, Pub/Sub, cron jobs, object storage. Encore Cloud then provisions these resources in your GCP project using managed services like Cloud SQL, GCP Pub/Sub, and Cloud Storage.

The result is GCP infrastructure you own and control, with a simple deployment workflow: push code, get a deployment. No Terraform to learn, no YAML to maintain. Companies like Groupon already use this approach to power their backends at scale.

What You're Migrating

| Heroku Component | GCP Equivalent (via Encore) |
|---|---|
| Web Dynos | Cloud Run |
| Worker Dynos | Pub/Sub subscribers |
| Heroku Postgres | Cloud SQL |
| Heroku Redis | Pub/Sub (for queues) or Memorystore (for caching) |
| Heroku Scheduler | Cloud Scheduler + Cloud Run |
| Config Vars | Encore Secrets |
| Pipelines | Encore Environments |
| Review Apps | Preview Environments |
| Add-ons | GCP Services |

Why GCP?

If you're choosing between AWS and GCP for your Heroku migration, GCP has a few advantages. Cloud Run has fast cold starts (often under 100ms for Node.js) and scales to zero when idle, which makes it the closest match to Heroku dynos among the major cloud providers. You also get direct access to GCP services like BigQuery for analytics, Vertex AI for machine learning, and Firestore for document storage. GCP's pricing tends to be more straightforward than AWS's, with fewer hidden costs for data transfer and API calls, and sustained use discounts on Compute Engine apply automatically as your usage increases.

What Encore Handles For You

When you deploy to GCP through Encore Cloud, every resource gets production defaults: VPC placement, least-privilege IAM service accounts, encryption at rest, automated backups where applicable, and Cloud Logging. You don't configure this per resource; it's automatic.

Encore follows GCP best practices and gives you guardrails: you can review infrastructure changes before they're applied. Everything runs in your own GCP project, so you keep full control.

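```typescript
import { api } from "encore.dev/api";
import { SQLDatabase } from "encore.dev/storage/sqldb";
import { Bucket } from "encore.dev/storage/objects";
import { Topic } from "encore.dev/pubsub";
import { CronJob } from "encore.dev/cron";

interface OrderEvent { orderId: string; status: string } // example message payload

const db = new SQLDatabase("main", { migrations: "./migrations" }); // Cloud SQL
const uploads = new Bucket("uploads", { versioned: false }); // Cloud Storage
const events = new Topic<OrderEvent>("events", { deliveryGuarantee: "at-least-once" }); // GCP Pub/Sub

// Placeholder endpoint for the cron job to call
export const cleanup = api({ method: "POST", path: "/internal/cleanup" }, async (): Promise<void> => {});
const _ = new CronJob("daily-cleanup", { title: "Daily cleanup", schedule: "0 0 * * *", endpoint: cleanup }); // Cloud Scheduler
```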

This provisions Cloud SQL, Cloud Storage, Pub/Sub, and Cloud Scheduler with proper networking, IAM, and monitoring. You write TypeScript or Go; Encore handles the provisioning you would otherwise write in Terraform. The Encore-specific parts are the imports and resource declarations; your business logic is standard TypeScript, so you're not locked in. For teams using AI coding tools like Cursor or Claude Code, keeping infrastructure declarations in the same code as the application logic means the two don't drift apart.

See the infrastructure primitives docs for the full list of supported resources.

Step 1: Migrate Your Web Dynos

Heroku uses a Procfile to define processes. With Encore, infrastructure is defined by your code and deploys to Cloud Run.

Heroku Procfile:

```text
web: npm start
```

Encore equivalent:

```typescript
import { api, APIError } from "encore.dev/api";
import { db } from "./db"; // the SQLDatabase("main") definition, e.g. in db.ts

interface User {
  id: string;
  email: string;
  name: string;
  createdAt: Date;
}

// Each endpoint gets built-in tracing, metrics, and API docs automatically.
export const getUser = api(
  { method: "GET", path: "/users/:id", expose: true },
  async ({ id }: { id: string }): Promise<User> => {
    const user = await db.queryRow<User>`
      SELECT id, email, name, created_at as "createdAt"
      FROM users WHERE id = ${id}
    `;
    // queryRow resolves to null when no row matches; return a 404 instead
    if (!user) {
      throw APIError.notFound("user not found");
    }
    return user;
  }
);

export const listUsers = api(
  { method: "GET", path: "/users", expose: true },
  async (): Promise<{ users: User[] }> => {
    const rows = await db.query<User>`
      SELECT id, email, name, created_at as "createdAt"
      FROM users
      ORDER BY created_at DESC
      LIMIT 100
    `;
    // query yields rows as an async iterator
    const users: User[] = [];
    for await (const user of rows) {
      users.push(user);
    }
    return { users };
  }
);
```

No Procfile, no Dockerfile, no configuration files needed. Encore analyzes your code and provisions Cloud Run services that scale to zero when idle and start quickly.

If you have multiple Heroku apps, create separate Encore services:

```typescript
// api/encore.service.ts
import { Service } from "encore.dev/service";

export default new Service("api");

// billing/encore.service.ts
import { Service } from "encore.dev/service";

export default new Service("billing");
```

Services can call each other with type-safe imports:

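```typescript
import { billing } from "~encore/clients";

// Inside an API handler (or any async function) in another service:
// the call is type-checked against the billing service's getInvoice endpoint.
const invoice = await billing.getInvoice({ orderId: "123" });
```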

Step 2: Migrate Heroku Postgres to Cloud SQL

Both use PostgreSQL, so the migration is a data transfer rather than a schema conversion.

Export from Heroku

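```bash
# Get the database URL
heroku config:get DATABASE_URL -a your-app

# Export using Heroku's built-in backup (downloads a custom-format file, latest.dump)
heroku pg:backups:capture -a your-app
heroku pg:backups:download -a your-app

# Or use pg_dump directly as plain SQL: --data-only because Encore's migrations
# create the schema; --no-owner/--no-acl drop Heroku-specific roles and grants
pg_dump --data-only --no-owner --no-acl "your-heroku-database-url" > backup.sql
```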

Set Up the Encore Database

```typescript
// db.ts
import { SQLDatabase } from "encore.dev/storage/sqldb";

export const db = new SQLDatabase("main", {
  migrations: "./migrations",
});
```

That's the complete database definition. Encore analyzes it at compile time and provisions a Cloud SQL PostgreSQL instance when you deploy.

Put your existing migration files in ./migrations, named with a numeric prefix and an .up.sql suffix (for example, 001_initial.up.sql). If you don't have migration files, create one from your current schema:

```bash
# Generate a schema-only dump without Heroku-specific ownership and privileges
pg_dump --schema-only --no-owner --no-acl "your-heroku-database-url" > migrations/001_initial.up.sql
```
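If you bring migration files over from another tool, it can help to check their names before the first deploy. The sketch below assumes the `<number>_<description>.up.sql` naming used in this guide (as in `001_initial.up.sql`); `checkMigrations` is a hypothetical helper, not part of Encore, and you would pass it the file names from `./migrations` yourself.

```typescript
// Hypothetical pre-deploy check for migration file names. Assumes the
// "<number>_<description>.up.sql" pattern used in this guide.
const MIGRATION_PATTERN = /^(\d+)_([A-Za-z0-9_-]+)\.up\.sql$/;

interface MigrationCheck {
  ordered: string[]; // valid files, sorted by numeric prefix
  invalid: string[]; // files that don't match the pattern
  duplicates: number[]; // numeric prefixes used by more than one file
}

function checkMigrations(files: string[]): MigrationCheck {
  const invalid: string[] = [];
  const byVersion = new Map<number, string[]>();

  for (const file of files) {
    const match = MIGRATION_PATTERN.exec(file);
    if (match === null) {
      invalid.push(file);
      continue;
    }
    // Compare prefixes numerically, so "001" and "1" count as the same version
    const version = Number(match[1]);
    const group = byVersion.get(version) ?? [];
    group.push(file);
    byVersion.set(version, group);
  }

  const versions = Array.from(byVersion.keys()).sort((a, b) => a - b);
  const ordered: string[] = [];
  const duplicates: number[] = [];
  for (const version of versions) {
    const group = byVersion.get(version) ?? [];
    if (group.length > 1) duplicates.push(version);
    ordered.push(...group);
  }
  return { ordered, invalid, duplicates };
}
```

Failing the check on any `invalid` or `duplicates` entry catches misnamed or conflicting files before Encore tries to apply them.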

Import to Cloud SQL

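After your first Encore deploy, which runs your migrations and creates the schema, import the data:

```bash
# Get the Cloud SQL connection string
DB_URI="$(encore db conn-uri main --env=production)"

# Import a plain-SQL, data-only dump made with pg_dump
psql "$DB_URI" < backup.sql

# Or restore the custom-format latest.dump from heroku pg:backups:download;
# --data-only because the migrations already created the schema
pg_restore --data-only --no-owner --no-acl -d "$DB_URI" latest.dump
```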

Encore handles the Cloud SQL proxy connection automatically.

Update Your Queries

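If you were using an ORM like Prisma or Drizzle, it should work with minimal changes since the underlying database is still PostgreSQL; point it at the connection string Encore exposes as `db.connectionString`. For raw queries, Encore provides type-safe tagged template queries, where `${...}` values are sent as query parameters rather than spliced into the SQL: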

```typescript
import { api } from "encore.dev/api";
import { db } from "./db"; // the SQLDatabase("main") definition, e.g. in db.ts

interface Order {
  id: string;
  customerId: string;
  total: number;
  status: string;
  createdAt: Date;
}

export const getRecentOrders = api(
  { method: "GET", path: "/orders/recent", expose: true },
  async (): Promise<{ orders: Order[] }> => {
    // Aliases map snake_case columns to the camelCase fields of Order
    const rows = await db.query<Order>`
      SELECT id, customer_id as "customerId", total, status, created_at as "createdAt"
      FROM orders
      WHERE created_at > NOW() - INTERVAL '30 days'
      ORDER BY created_at DESC
    `;
    const orders: Order[] = [];
    for await (const order of rows) {
      orders.push(order);
    }
    return { orders };
  }
);
```
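The queries above alias every column so rows match the interface fields. If a query selects many snake_case columns, mapping keys after fetching is an alternative. The sketch below is a hypothetical helper, not part of Encore; the cast to `T` is not checked at runtime, so `T` must describe the columns the query actually returns.

```typescript
// Hypothetical helper: converts snake_case keys (e.g. "created_at") to camelCase ("createdAt").
function toCamelCase(key: string): string {
  return key.replace(/_([a-z0-9])/g, (_match: string, ch: string) => ch.toUpperCase());
}

// Returns a copy of a row with camelCase keys. The cast to T is unchecked.
function camelizeRow<T>(row: Record<string, unknown>): T {
  const out: Record<string, unknown> = {};
  for (const key of Object.keys(row)) {
    out[toCamelCase(key)] = row[key];
  }
  return out as T;
}
```

With this, you could select columns without aliases and pass each row through `camelizeRow<Order>` before returning it.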

Step 3: Migrate Worker Dynos

Heroku worker dynos are separate processes defined in your Procfile:

Heroku Procfile:

```text
web: npm start
worker: node worker.js
```

With Encore, background workers become Pub/Sub subscribers. On GCP, this uses native GCP Pub/Sub with automatic retries and dead-letter handling.
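Retries and dead-lettering are handled by the subscription, not by your code. To show what they mean, here is a small model of the decision made after each failed delivery. `nextStep`, `RetryPolicy`, and all the numbers are hypothetical; they are not the settings GCP Pub/Sub or Encore actually use.

```typescript
// Illustration only: what happens to a message after a failed delivery.
// Names and values are hypothetical, not GCP Pub/Sub's or Encore's defaults.
interface RetryPolicy {
  minBackoffMs: number; // delay before the first retry
  maxBackoffMs: number; // cap on any single delay
  maxAttempts: number; // failed attempts before the message is dead-lettered
}

type RetryDecision = { kind: "retry"; delayMs: number } | { kind: "dead-letter" };

// `failedAttempts` counts failures so far, starting at 1 after the first failure.
function nextStep(failedAttempts: number, policy: RetryPolicy): RetryDecision {
  if (failedAttempts >= policy.maxAttempts) {
    return { kind: "dead-letter" };
  }
  // Exponential backoff: the delay doubles after each failure, up to maxBackoffMs
  const delayMs = Math.min(
    policy.minBackoffMs * Math.pow(2, failedAttempts - 1),
    policy.maxBackoffMs,
  );
  return { kind: "retry", delayMs };
}
```

With `{ minBackoffMs: 1000, maxBackoffMs: 5000, maxAttempts: 5 }`, the first four failures wait 1s, 2s, 4s, and 5s (capped), and the fifth failure moves the message to the dead-letter topic.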

Before (Heroku with Bull/Redis):

```typescript
import Queue from "bull";

const processQueue = new Queue("process", process.env.REDIS_URL);

// Worker dyno
processQueue.process(async (job) => {
  await processItem(job.data.itemId);
});
```

After (Encore):

```typescript
import { Topic, Subscription } from "encore.dev/pubsub";
import { api } from "encore.dev/api";
import { processItem } from "./items"; // hypothetical module with your existing processing logic

interface ProcessingJob {
  itemId: string;
  action: string;
}

const processingQueue = new Topic<ProcessingJob>("processing", {
  deliveryGuarantee: "at-least-once",
});

// Enqueue jobs from your API
export const startProcessing = api(
  { method: "POST", path: "/items/:id/process", expose: true },
  async ({ id }: { id: string }): Promise<{ queued: boolean }> => {
    await processingQueue.publish({ itemId: id, action: "process" });
    return { queued: true };
  }
);

// Process jobs automatically; if the handler throws, the message is retried
const _ = new Subscription(processingQueue, "process-items", {
  handler: async (job) => {
    await processItem(job.itemId);
  },
});
```

The subscription handler runs for each message. If it throws, the message is retried with exponential backoff. There's no Redis instance to manage and no worker dyno to keep running.
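Because the topic uses at-least-once delivery, a retried or redelivered message can reach the handler more than once, so handlers should be idempotent. A minimal sketch of a deduplicating wrapper, assuming each (itemId, action) pair should be processed once; `idempotent` is a hypothetical helper, and its in-memory `Set` only illustrates the idea, since Cloud Run instances don't share memory. In production you would record processed keys durably, for example in a database table.

```typescript
// Sketch: deduplicating wrapper for an at-least-once subscription handler.
// `idempotent` is hypothetical, not an Encore API. The in-memory Set is for
// illustration only; it is per-instance and lost on restart. It also doesn't
// guard against two copies of a message being handled concurrently.
interface ProcessingJob {
  itemId: string;
  action: string;
}

function idempotent(
  keyOf: (job: ProcessingJob) => string,
  handler: (job: ProcessingJob) => Promise<void>,
): (job: ProcessingJob) => Promise<void> {
  const processed = new Set<string>();
  return async (job: ProcessingJob): Promise<void> => {
    const key = keyOf(job);
    if (processed.has(key)) {
      return; // duplicate delivery of a job that already succeeded
    }
    await handler(job); // if this throws, the key isn't recorded, so a retry runs again
    processed.add(key);
  };
}
```

You would pass the wrapped function as the subscription's `handler`, for example wrapping `(job) => processItem(job.itemId)` with a key of `itemId` plus `action`.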

Step 4: Migrate Heroku Redis

If you're using Heroku Redis beyond job queues:

For Caching

GCP options for caching:

- Memorystore (Redis): Managed Redis on GCP. Provision it via the GCP Console and store its connection string as an Encore secret.
- Database caching: For simple caching, a PostgreSQL table with an expiry timestamp column works fine. Index that column and add a cron job to delete expired rows.
- In-memory caching: For request-scoped or short-lived data, in-process caching might be sufficient.
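For the in-memory option, a small time-based cache is often enough. A minimal sketch, with `TtlCache` as a hypothetical class (not an Encore or GCP API). Each Cloud Run instance holds its own copy, so entries aren't shared between instances and disappear when an instance shuts down.

```typescript
// Minimal in-process TTL cache (hypothetical, not an Encore API).
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  // `now` is injectable so expiry can be tested without waiting
  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // evict expired entries lazily, on read
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}
```

Lazy eviction keeps the sketch small; entries that are never read again stay in memory until the instance restarts, so bound the key space or add periodic cleanup if that matters.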

For Real-Time Pub/Sub

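Redis pub/sub maps directly to Encore's Topic and Subscription model. On GCP, this uses native GCP Pub/Sub. Unlike Redis pub/sub, which drops a message if no subscriber is connected when it's published, GCP Pub/Sub retains messages for each subscription until they're acknowledged.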

Step 5: Migrate Heroku Scheduler

Heroku Scheduler tasks become Encore CronJobs that run on Cloud Scheduler:

Heroku Scheduler:

```text
rake cleanup:expired_sessions    Every day at 2:00 AM UTC
node scripts/send-digest.js      Every day at 9:00 AM UTC
```

Encore:

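```typescript
import { CronJob } from "encore.dev/cron";
import { api } from "encore.dev/api";
import { db } from "./db"; // the SQLDatabase("main") definition, e.g. in db.ts
// Hypothetical helpers from your existing codebase
import { getDigestSubscribers, sendDigestEmail } from "./digest";

export const cleanup = api(
  { method: "POST", path: "/internal/cleanup" },
  async (): Promise<{ deleted: number }> => {
    // Delete expired sessions and count the deleted rows in one statement
    const row = await db.queryRow<{ count: number }>`
      WITH deleted AS (
        DELETE FROM sessions WHERE expires_at < NOW() RETURNING 1
      )
      SELECT COUNT(*)::int AS count FROM deleted
    `;
    return { deleted: row?.count ?? 0 };
  }
);

// Every day at 02:00 UTC
const _ = new CronJob("daily-cleanup", {
  title: "Clean up expired sessions",
  schedule: "0 2 * * *",
  endpoint: cleanup,
});

export const sendDigest = api(
  { method: "POST", path: "/internal/send-digest" },
  async (): Promise<{ sent: number }> => {
    const users = await getDigestSubscribers();
    for (const user of users) {
      await sendDigestEmail(user);
    }
    return { sent: users.length };
  }
);

// Every day at 09:00 UTC
const __ = new CronJob("daily-digest", {
  title: "Send daily email digest",
  schedule: "0 9 * * *",
  endpoint: sendDigest,
});
```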

On GCP, this provisions Cloud Scheduler to trigger your Cloud Run service on schedule.
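Heroku Scheduler offers three frequencies: every 10 minutes, every hour at a given minute, and every day at a given time, all in UTC. The hypothetical `toCron` helper below translates each into a cron expression for a CronJob's `schedule`, assuming the schedule is evaluated in UTC as in the jobs above.

```typescript
// Hypothetical helper: translates a Heroku Scheduler frequency into a cron
// expression ("minute hour day-of-month month day-of-week").
type HerokuFrequency =
  | { every: "10 minutes" }
  | { every: "hour"; minute: number }
  | { every: "day"; hour: number; minute: number };

function checkRange(value: number, max: number, label: string): void {
  if (!Number.isInteger(value) || value < 0 || value > max) {
    throw new Error(`${label} must be an integer between 0 and ${max}, got ${value}`);
  }
}

function toCron(freq: HerokuFrequency): string {
  if (freq.every === "10 minutes") {
    return "*/10 * * * *";
  }
  if (freq.every === "hour") {
    checkRange(freq.minute, 59, "minute");
    return `${freq.minute} * * * *`;
  }
  // Daily at HH:MM (UTC)
  checkRange(freq.hour, 23, "hour");
  checkRange(freq.minute, 59, "minute");
  return `${freq.minute} ${freq.hour} * * *`;
}
```

For example, `toCron({ every: "day", hour: 2, minute: 0 })` and `toCron({ every: "day", hour: 9, minute: 0 })` produce the `"0 2 * * *"` and `"0 9 * * *"` schedules used above.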

Step 6: Migrate Config Vars

Heroku config vars become Encore secrets:

Heroku:

```bash
heroku config:set STRIPE_SECRET_KEY=sk_live_...
heroku config:set SENDGRID_API_KEY=SG...
```

Encore:

```bash
encore secret set --type=production StripeSecretKey
encore secret set --type=production SendgridApiKey
```

Use them in code:

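```typescript
import { secret } from "encore.dev/config";
import Stripe from "stripe";

const stripeKey = secret("StripeSecretKey");

// Read the secret's value by calling the function returned by secret()
const stripe = new Stripe(stripeKey());
```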

Secrets are environment-specific and encrypted at rest.
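With many config vars, renaming them by hand is error-prone. A sketch, assuming you want the same PascalCase convention as above (STRIPE_SECRET_KEY becomes StripeSecretKey); `toSecretName` and `secretSetCommands` are hypothetical helpers. It skips DATABASE_URL and REDIS_URL on the assumption that, as in this guide, the database and queues are now Encore-managed resources. It only prints commands for the names listed by `heroku config -a your-app` and doesn't handle the secret values.

```typescript
// Heroku config vars replaced by Encore-managed resources in this guide
// (SQLDatabase and Pub/Sub), so they don't become secrets.
const PROVISIONED_BY_ENCORE = new Set(["DATABASE_URL", "REDIS_URL"]);

// Hypothetical helper: "STRIPE_SECRET_KEY" -> "StripeSecretKey"
function toSecretName(configVar: string): string {
  return configVar
    .toLowerCase()
    .split("_")
    .filter((part) => part.length > 0)
    .map((part) => part.charAt(0).toUpperCase() + part.slice(1))
    .join("");
}

// Hypothetical helper: one `encore secret set` command per remaining config var.
function secretSetCommands(configVars: string[], envType: string = "production"): string[] {
  return configVars
    .filter((name) => !PROVISIONED_BY_ENCORE.has(name))
    .map((name) => `encore secret set --type=${envType} ${toSecretName(name)}`);
}
```

Remember to update the code that reads each value, since `process.env.STRIPE_SECRET_KEY` becomes `secret("StripeSecretKey")`.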

Step 7: Migrate Heroku Pipelines

Heroku Pipelines give you staging and production environments. Encore has the same concept:

- Preview environments: Automatically created for each pull request (replaces Heroku Review Apps)

- Staging: A persistent environment for pre-production testing

- Production: Your live environment connected to your GCP project

Each environment gets its own set of infrastructure resources, fully isolated.

Step 8: Deploy to GCP

- Connect your GCP project in the Encore Cloud dashboard. See the GCP setup guide for details.
- Push your code: `git push encore main`
- Import database data after the first deploy
- Test in a preview environment
- Update DNS to point to your new endpoints
- Verify and remove Heroku apps
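Before updating DNS, a quick comparison between the Heroku app and the new deployment can catch missing routes. A minimal sketch: `compareStatuses` is a hypothetical helper that requests the same paths from both base URLs and reports paths whose HTTP status codes differ. By default it uses the global `fetch` (available in Node.js 18 and later); the base URLs and paths are yours to supply.

```typescript
// Hypothetical pre-cutover smoke test: compares status codes for the same paths
// on the old (Heroku) and new (Cloud Run) base URLs.
type FetchLike = (url: string) => Promise<{ status: number }>;

interface Mismatch {
  path: string;
  oldStatus: number;
  newStatus: number;
}

async function compareStatuses(
  paths: string[],
  oldBase: string,
  newBase: string,
  fetchFn: FetchLike = (url) => fetch(url), // swap in a fake for tests
): Promise<Mismatch[]> {
  const mismatches: Mismatch[] = [];
  for (const path of paths) {
    // Request both deployments in parallel for each path
    const [oldRes, newRes] = await Promise.all([fetchFn(oldBase + path), fetchFn(newBase + path)]);
    if (oldRes.status !== newRes.status) {
      mismatches.push({ path, oldStatus: oldRes.status, newStatus: newRes.status });
    }
  }
  return mismatches;
}
```

Comparing status codes is a coarse check; for key endpoints you may also want to compare response bodies before switching traffic.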

What Gets Provisioned

Encore creates in your GCP project:

- Cloud Run for your services

- Cloud SQL PostgreSQL for databases

- GCP Pub/Sub for messaging

- Cloud Scheduler for cron jobs

- Cloud Storage for object storage (if you define buckets)

- Cloud Logging for application logs

- IAM service accounts with least-privilege access

You can view and manage these in the GCP Console.

Migration Checklist

- Inventory all Heroku apps and their dependencies

- Export Heroku Postgres database

- Create Encore app with service structure matching your Heroku apps

- Set up database schema and migrations

- Import data to Cloud SQL after first deploy

- Convert worker dynos to Pub/Sub subscribers

- Convert Heroku Scheduler jobs to CronJobs

- Migrate Redis usage (queue → Pub/Sub, cache → Memorystore)

- Move config vars to Encore secrets

- Set up environments (staging, production)

- Test in preview environment

- Update DNS

- Monitor for issues

- Delete Heroku apps

Wrapping Up

Cloud Run's scaling and cold start performance make GCP a natural fit for teams migrating from Heroku. You get a similar pay-for-what-you-use model with full infrastructure control. The code changes are minimal since the core concepts (services, databases, background jobs, cron) are the same.

The biggest shift is replacing Redis-backed job queues with GCP Pub/Sub. For most applications, this is a better fit: managed message delivery with automatic retries and dead-letter handling, no Redis instance to manage.